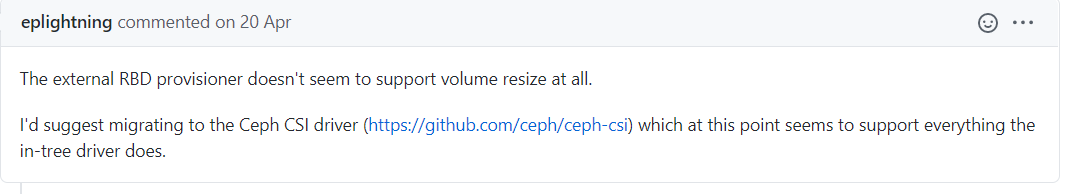

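I have recently been testing automatic expansion of Ceph RBD volumes on Kubernetes. Back on K8s v1.11.0 the procedure was manual: locate the target volume, grow it with the `rbd resize` command, find the client host that has the volume mounted, and run a file system refresh command such as `xfs_growfs` there. According to material online, the `ExpandInUsePersistentVolumes` feature is enabled from Kubernetes 1.15 onward, which roughly means that a PVC can be expanded while it is still in use by a Pod. Setting the relevant parameters as described in the guides I found did not help, and then I saw someone on GitHub mention that ceph-csi can expand PVCs automatically.

A quick word on what CSI is: the name stands for Container Storage Interface. It aims to establish a standard storage interface between container orchestration engines and storage systems, through which storage services are provided to the orchestrator.

Before CSI, Kubernetes provided storage services "in-tree": the storage provider's code had to live and run inside the Kubernetes code base, so the orchestrator and the plugins were tightly coupled.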

Environment

Kubernetes version: v1.17.0

Ceph version: Mimic

| Hostname | IP address | OS / kernel version | Roles |
| --- | --- | --- | --- |
| ceph1 | 192.168.186.10 | CentOS 7.6 / 3.10.0-957.el7.x86_64 | K8s_master, ceph mon, osd, mds |
| ceph2 | 192.168.186.11 | CentOS 7.6 / 3.10.0-957.el7.x86_64 | K8s_node, ceph mon, osd, mds |
| ceph3 | 192.168.186.12 | CentOS 7.6 / 3.10.0-957.el7.x86_64 | K8s_node, ceph mon, osd, mds |

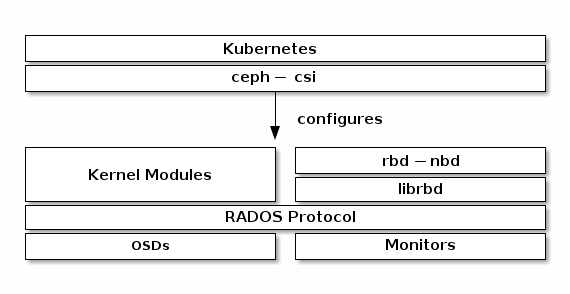

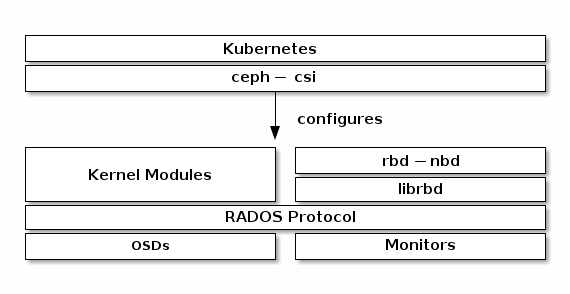

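Overall architecture diagram

According to the official Ceph documentation:

By default ceph-csi uses the RBD kernel modules, which may not support all Ceph CRUSH tunables or RBD image features.

Installation steps

Kubernetes and Ceph are already installed in my environment, so the following only covers how to connect the two.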

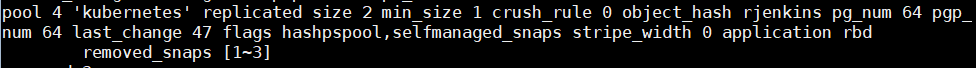

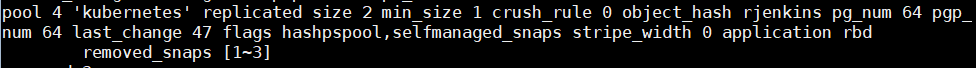

1. Create a storage pool

Since the Luminous release, Ceph no longer creates a default rbd pool, so we need to create a dedicated pool for Kubernetes.

```
$ ceph osd pool create kubernetes 64 64
```

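Initialize the newly created pool.

This initialization step is not needed on Jewel. On releases after Jewel, a newly created pool must also have its application (rbd, cephfs, or rgw) enabled, using the command

```
ceph osd pool application enable <pool-name> <app-name>
```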

```
$ rbd pool init kubernetes
```

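Checking afterwards shows that the newly created kubernetes pool has automatically been tagged with the rbd application.

2. Configure ceph-csi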

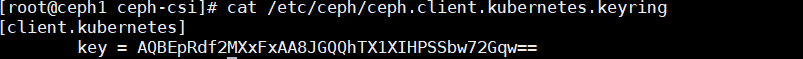

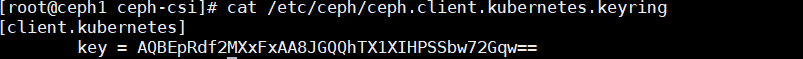

Set up Ceph client authentication.

The command given in the official documentation is:

```
ceph auth get-or-create client.kubernetes mon 'profile rbd' osd 'profile rbd pool=kubernetes' mgr 'profile rbd pool=kubernetes'
```

The command actually run in this setup was:

```
$ ceph auth get-or-create client.kubernetes mon 'allow r' osd 'allow rwx pool=kubernetes' -o ceph.client.kubernetes.keyring
```

The generated keyring file contains the user's key, which is needed in the configuration later on.
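As a rough sketch, the key can be pulled out of the keyring file written by `-o ceph.client.kubernetes.keyring`. The helper name `keyring_key` is hypothetical, and the sketch assumes the usual keyring layout in which the secret sits on a line of the form `key = <value>`.

```shell
#!/bin/sh
# Hypothetical helper: print the value of the "key = ..." line in a Ceph keyring file.
# Assumes the usual keyring layout:
#   [client.kubernetes]
#       key = <base64 secret>
keyring_key() {
    awk '$1 == "key" && $2 == "=" { print $3 }' "$1"
}

# Usage on the host where the keyring was written:
#   keyring_key ceph.client.kubernetes.keyring
# Alternatively, ask the cluster directly:
#   ceph auth get-key client.kubernetes
```

Either route should yield the string that goes into the `userKey` field of the Secret.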

3. Generate the ceph-csi ConfigMap

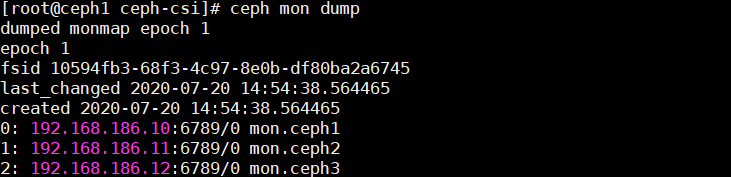

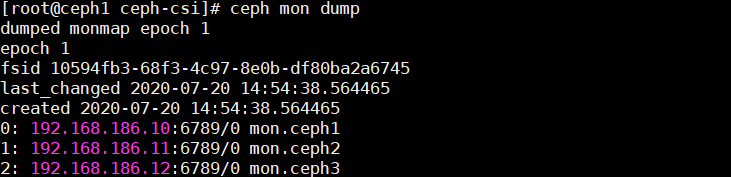

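Two pieces of information are needed here: the fsid (cluster ID) and the monitor node addresses.

Some people see two versions of the monitor addresses (v1 and v2) in the query output. ceph-csi currently supports only the v1 protocol, so only the v1 IP and port can be used for the monitors. Nothing needed changing in my case.

Write the corresponding ConfigMap.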
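For clusters that do report both protocols, the v1 addresses can be filtered out of the monitor listing. This is a minimal sketch, assuming the `ceph mon dump` output uses the bracketed `[v2:<ip>:3300/0,v1:<ip>:6789/0]` form seen on newer releases; `v1_monitors` is a hypothetical helper name. On a cluster that prints plain `<ip>:6789` addresses, as mine does, no filtering is needed.

```shell
#!/bin/sh
# Hypothetical helper: read `ceph mon dump` output on stdin and print only the
# v1 (legacy protocol) monitor addresses, one per line, as ip:port.
# Assumes each monitor line contains an entry of the form "v1:<ip>:<port>/<nonce>".
v1_monitors() {
    grep -oE 'v1:[0-9.]+:[0-9]+' | sed 's/^v1://'
}

# Usage on a Ceph admin host:
#   ceph mon dump | v1_monitors
#   ceph fsid        # prints the cluster ID used as "clusterID"
```

The printed addresses are the values that belong in the `monitors` array of the ConfigMap.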

```yaml
---
apiVersion: v1
kind: ConfigMap
data:
  config.json: |-
    [
      {
        "clusterID": "10594fb3-68f3-4c97-8e0b-df80ba2a6745",
        "monitors": [
          "192.168.186.10:6789",
          "192.168.186.11:6789",
          "192.168.186.12:6789"
        ]
      }
    ]
metadata:
  name: ceph-csi-config
```

Deploy:

```
$ kubectl apply -f csi-config-map.yaml
```

4. Generate the Secret for ceph-csi authentication

```yaml
---
apiVersion: v1
kind: Secret
metadata:
  name: csi-rbd-secret
  namespace: default
stringData:
  userID: kubernetes
  userKey: AQBEpRdf2MXxFxAA8JGQQhTX1XIHPSSbw72Gqw==
```

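This is where the user ID (kubernetes) and key of the user created earlier are used.

Deploy:

```
$ kubectl apply -f csi-rbd-secret.yaml
```

5. Configure the ceph-csi plugins

The plugins here consist of the RBAC objects on Kubernetes and the containers that provide the storage functionality.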

```
$ kubectl apply -f csi-provisioner-rbac.yaml
```

```
$ cat csi-provisioner-rbac.yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rbd-csi-provisioner
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-external-provisioner-runner
aggregationRule:
  clusterRoleSelectors:
    - matchLabels:
        rbac.rbd.csi.ceph.com/aggregate-to-rbd-external-provisioner-runner: "true"
rules: []
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-external-provisioner-runner-rules
  labels:
    rbac.rbd.csi.ceph.com/aggregate-to-rbd-external-provisioner-runner: "true"
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get", "list"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "update", "delete", "patch"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims/status"]
    verbs: ["update", "patch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshots"]
    verbs: ["get", "list"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshotcontents"]
    verbs: ["create", "get", "list", "watch", "update", "delete"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshotclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["volumeattachments"]
    verbs: ["get", "list", "watch", "update", "patch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["csinodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshotcontents/status"]
    verbs: ["update"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-csi-provisioner-role
subjects:
  - kind: ServiceAccount
    name: rbd-csi-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: rbd-external-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: default
  name: rbd-external-provisioner-cfg
rules:
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: ["coordination.k8s.io"]
    resources: ["leases"]
    verbs: ["get", "watch", "list", "delete", "update", "create"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-csi-provisioner-role-cfg
  namespace: default
subjects:
  - kind: ServiceAccount
    name: rbd-csi-provisioner
    namespace: default
roleRef:
  kind: Role
  name: rbd-external-provisioner-cfg
  apiGroup: rbac.authorization.k8s.io
```

```
$ kubectl apply -f csi-nodeplugin-rbac.yaml
```

```
$ cat csi-nodeplugin-rbac.yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rbd-csi-nodeplugin
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-csi-nodeplugin
aggregationRule:
  clusterRoleSelectors:
    - matchLabels:
        rbac.rbd.csi.ceph.com/aggregate-to-rbd-csi-nodeplugin: "true"
rules: []
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-csi-nodeplugin-rules
  labels:
    rbac.rbd.csi.ceph.com/aggregate-to-rbd-csi-nodeplugin: "true"
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-csi-nodeplugin
subjects:
  - kind: ServiceAccount
    name: rbd-csi-nodeplugin
    namespace: default
roleRef:
  kind: ClusterRole
  name: rbd-csi-nodeplugin
  apiGroup: rbac.authorization.k8s.io
```

```
$ kubectl apply -f csi-rbdplugin-provisioner.yaml
```

```
$ cat csi-rbdplugin-provisioner.yaml
---
kind: Service
apiVersion: v1
metadata:
  name: csi-rbdplugin-provisioner
  labels:
    app: csi-metrics
spec:
  selector:
    app: csi-rbdplugin-provisioner
  ports:
    - name: http-metrics
      port: 8080
      protocol: TCP
      targetPort: 8680
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: csi-rbdplugin-provisioner
spec:
  replicas: 3
  selector:
    matchLabels:
      app: csi-rbdplugin-provisioner
  template:
    metadata:
      labels:
        app: csi-rbdplugin-provisioner
    spec:
      serviceAccount: rbd-csi-provisioner
      containers:
        - name: csi-provisioner
          image: quay.io/k8scsi/csi-provisioner:v1.6.0
          args:
            - "--csi-address=$(ADDRESS)"
            - "--v=5"
            - "--timeout=150s"
            - "--retry-interval-start=500ms"
            - "--enable-leader-election=true"
            - "--leader-election-type=leases"
            - "--feature-gates=Topology=true"
          env:
            - name: ADDRESS
              value: unix:///csi/csi-provisioner.sock
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: csi-snapshotter
          image: quay.io/k8scsi/csi-snapshotter:v2.1.0
          args:
            - "--csi-address=$(ADDRESS)"
            - "--v=5"
            - "--timeout=150s"
            - "--leader-election=true"
          env:
            - name: ADDRESS
              value: unix:///csi/csi-provisioner.sock
          imagePullPolicy: "IfNotPresent"
          securityContext:
            privileged: true
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: csi-attacher
          image: quay.io/k8scsi/csi-attacher:v2.1.1
          args:
            - "--v=5"
            - "--csi-address=$(ADDRESS)"
            - "--leader-election=true"
            - "--retry-interval-start=500ms"
          env:
            - name: ADDRESS
              value: /csi/csi-provisioner.sock
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: csi-resizer
          image: quay.io/k8scsi/csi-resizer:v0.5.0
          args:
            - "--csi-address=$(ADDRESS)"
            - "--v=5"
            - "--csiTimeout=150s"
            - "--leader-election"
            - "--retry-interval-start=500ms"
          env:
            - name: ADDRESS
              value: unix:///csi/csi-provisioner.sock
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: csi-rbdplugin
          securityContext:
            privileged: true
            capabilities:
              add: ["SYS_ADMIN"]
          image: quay.io/cephcsi/cephcsi:canary
          args:
            - "--nodeid=$(NODE_ID)"
            - "--type=rbd"
            - "--controllerserver=true"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--v=5"
            - "--drivername=rbd.csi.ceph.com"
            - "--pidlimit=-1"
            - "--rbdhardmaxclonedepth=8"
            - "--rbdsoftmaxclonedepth=4"
          env:
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: NODE_ID
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: CSI_ENDPOINT
              value: unix:///csi/csi-provisioner.sock
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
            - mountPath: /dev
              name: host-dev
            - mountPath: /sys
              name: host-sys
            - mountPath: /lib/modules
              name: lib-modules
              readOnly: true
            - name: ceph-csi-config
              mountPath: /etc/ceph-csi-config/
            - name: keys-tmp-dir
              mountPath: /tmp/csi/keys
        - name: liveness-prometheus
          image: quay.io/cephcsi/cephcsi:canary
          args:
            - "--type=liveness"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--metricsport=8680"
            - "--metricspath=/metrics"
            - "--polltime=60s"
            - "--timeout=3s"
          env:
            - name: CSI_ENDPOINT
              value: unix:///csi/csi-provisioner.sock
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
          imagePullPolicy: "IfNotPresent"
      volumes:
        - name: host-dev
          hostPath:
            path: /dev
        - name: host-sys
          hostPath:
            path: /sys
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: socket-dir
          emptyDir: {
            medium: "Memory"
          }
        - name: ceph-csi-config
          configMap:
            name: ceph-csi-config
        - name: keys-tmp-dir
          emptyDir: {
            medium: "Memory"
          }
```

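The part commented out of the YAML file above refers to a ConfigMap that earlier test runs reported as not found. The official documentation does not create that object, so commenting it out has no effect.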

```
$ kubectl apply -f csi-rbdplugin.yaml
```

```
$ cat csi-rbdplugin.yaml
---
kind: DaemonSet
apiVersion: apps/v1
metadata:
  name: csi-rbdplugin
spec:
  selector:
    matchLabels:
      app: csi-rbdplugin
  template:
    metadata:
      labels:
        app: csi-rbdplugin
    spec:
      serviceAccount: rbd-csi-nodeplugin
      hostNetwork: true
      hostPID: true
      dnsPolicy: ClusterFirstWithHostNet
      containers:
        - name: driver-registrar
          securityContext:
            privileged: true
          image: quay.io/k8scsi/csi-node-driver-registrar:v1.3.0
          args:
            - "--v=5"
            - "--csi-address=/csi/csi.sock"
            - "--kubelet-registration-path=/data/kubelet/plugins/rbd.csi.ceph.com/csi.sock"
          lifecycle:
            preStop:
              exec:
                command: [
                  "/bin/sh", "-c",
                  "rm -rf /registration/rbd.csi.ceph.com \
                  /registration/rbd.csi.ceph.com-reg.sock"
                ]
          env:
            - name: KUBE_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
            - name: registration-dir
              mountPath: /registration
        - name: csi-rbdplugin
          securityContext:
            privileged: true
            capabilities:
              add: ["SYS_ADMIN"]
            allowPrivilegeEscalation: true
          image: quay.io/cephcsi/cephcsi:canary
          args:
            - "--nodeid=$(NODE_ID)"
            - "--type=rbd"
            - "--nodeserver=true"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--v=5"
            - "--drivername=rbd.csi.ceph.com"
          env:
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: NODE_ID
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: CSI_ENDPOINT
              value: unix:///csi/csi.sock
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
            - mountPath: /dev
              name: host-dev
            - mountPath: /sys
              name: host-sys
            - mountPath: /run/mount
              name: host-mount
            - mountPath: /lib/modules
              name: lib-modules
              readOnly: true
            - name: ceph-csi-config
              mountPath: /etc/ceph-csi-config/
            - name: plugin-dir
              mountPath: /data/kubelet/plugins
              mountPropagation: "Bidirectional"
            - name: mountpoint-dir
              mountPath: /data/kubelet/pods
              mountPropagation: "Bidirectional"
            - name: keys-tmp-dir
              mountPath: /tmp/csi/keys
        - name: liveness-prometheus
          securityContext:
            privileged: true
          image: quay.io/cephcsi/cephcsi:canary
          args:
            - "--type=liveness"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--metricsport=8680"
            - "--metricspath=/metrics"
            - "--polltime=60s"
            - "--timeout=3s"
          env:
            - name: CSI_ENDPOINT
              value: unix:///csi/csi.sock
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
          imagePullPolicy: "IfNotPresent"
      volumes:
        - name: socket-dir
          hostPath:
            path: /data/kubelet/plugins/rbd.csi.ceph.com
            type: DirectoryOrCreate
        - name: plugin-dir
          hostPath:
            path: /data/kubelet/plugins
            type: Directory
        - name: mountpoint-dir
          hostPath:
            path: /data/kubelet/pods
            type: DirectoryOrCreate
        - name: registration-dir
          hostPath:
            path: /data/kubelet/plugins_registry/
            type: Directory
        - name: host-dev
          hostPath:
            path: /dev
        - name: host-sys
          hostPath:
            path: /sys
        - name: host-mount
          hostPath:
            path: /run/mount
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: ceph-csi-config
          configMap:
            name: ceph-csi-config
        - name: keys-tmp-dir
          emptyDir: {
            medium: "Memory"
          }
---
apiVersion: v1
kind: Service
metadata:
  name: csi-metrics-rbdplugin
  labels:
    app: csi-metrics
spec:
  ports:
    - name: http-metrics
      port: 8080
      protocol: TCP
      targetPort: 8680
  selector:
    app: csi-rbdplugin
```

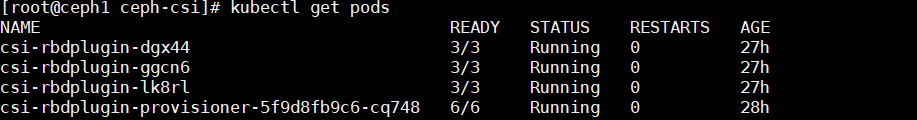

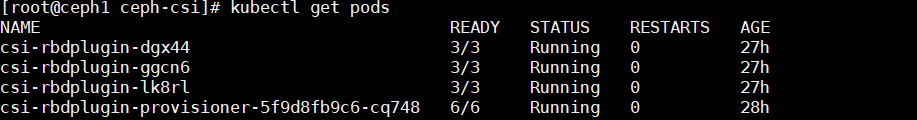

Check that the containers are running normally.

6. Use Ceph block devices

Create the StorageClass

```
$ kubectl apply -f csi-rbd-sc-filesystem.yaml
```

```
$ cat csi-rbd-sc-filesystem.yaml
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-rbd-sc-filesystem
provisioner: rbd.csi.ceph.com
parameters:
  clusterID: 10594fb3-68f3-4c97-8e0b-df80ba2a6745
  imageFeatures: layering
  pool: kubernetes
  csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret
  csi.storage.k8s.io/provisioner-secret-namespace: default
  csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret
  csi.storage.k8s.io/node-stage-secret-namespace: default
  csi.storage.k8s.io/controller-expand-secret-name: csi-rbd-secret
  csi.storage.k8s.io/controller-expand-secret-namespace: default
  csi.storage.k8s.io/fstype: ext4
reclaimPolicy: Delete
allowVolumeExpansion: true
mountOptions:
  - discard
```

Of these:

`csi.storage.k8s.io/controller-expand-secret-name: csi-rbd-secret`

`csi.storage.k8s.io/controller-expand-secret-namespace: default`

`allowVolumeExpansion: true`

All three parameters are required for ceph-csi to support dynamic expansion.

Create the PVC

```
$ kubectl apply -f filesystem-pvc2.yaml
```

```
$ cat filesystem-pvc2.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: filesystem-pvc-2
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Filesystem
  resources:
    requests:
      storage: 200Mi
  storageClassName: csi-rbd-sc-filesystem
```

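Besides Filesystem, `volumeMode` can also be set to Block. The difference is that a Filesystem volume is mounted directly under a directory, while a Block volume is exposed as a raw device, which is useful for services that operate on the raw disk directly for performance.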

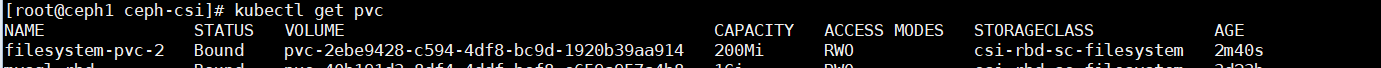

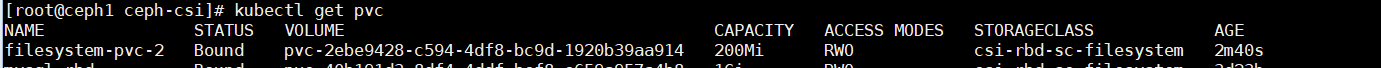

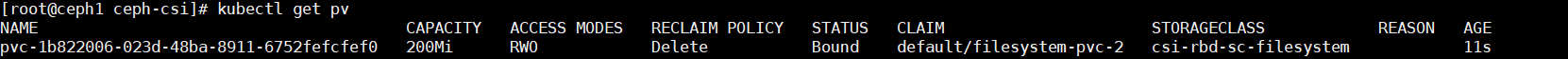

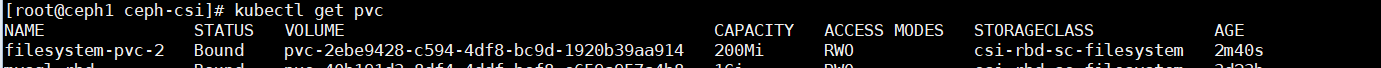

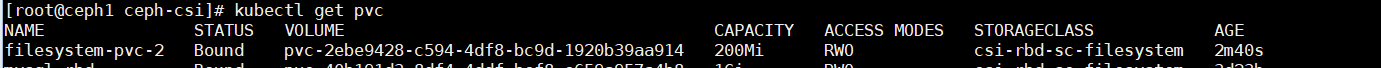

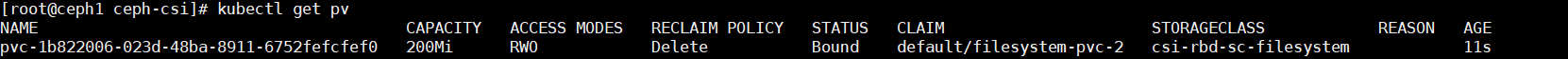

这个时候查看卷就创建出来了。

存储盘扩容测试

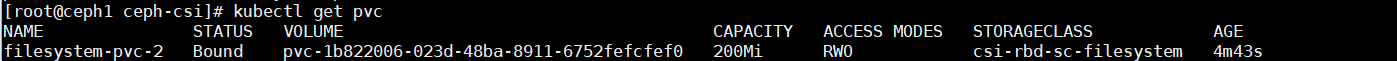

以上面创建出来的200Mi的filesystem-pvc-2卷举例,查看pvc与pv都是200M。

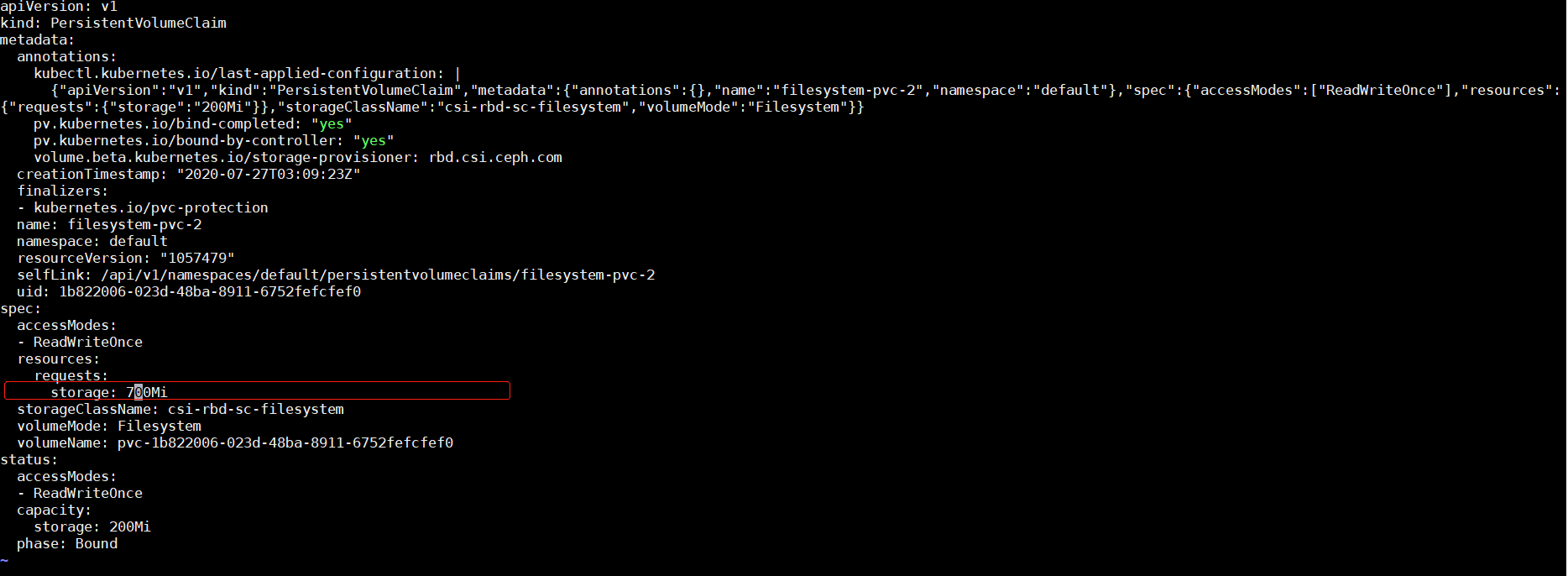

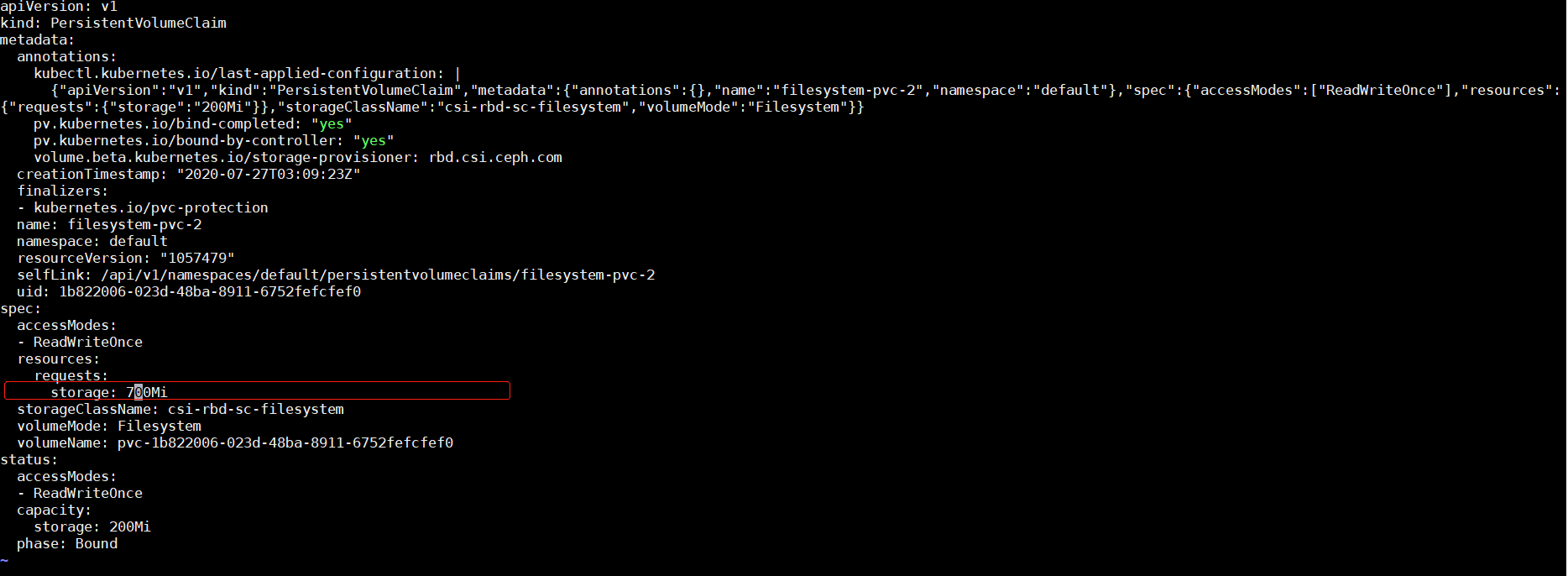

Edit the size in the PVC online, changing 200M to 700M, then save and exit.
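The same change can also be made without an interactive editor by patching the PVC. The following is only a sketch: `pvc_resize_patch` is a hypothetical helper that builds the JSON merge patch, and the `700Mi` value assumes binary units like the `200Mi` in the original manifest.

```shell
#!/bin/sh
# Hypothetical helper: build the JSON patch body that sets a PVC's requested size.
# $1 is the new size as a Kubernetes quantity, e.g. 700Mi (assumed unit).
pvc_resize_patch() {
    printf '{"spec":{"resources":{"requests":{"storage":"%s"}}}}' "$1"
}

# Usage against the PVC from this article (requires cluster access):
#   kubectl patch pvc filesystem-pvc-2 -p "$(pvc_resize_patch 700Mi)"
```

Kubernetes only accepts a larger value here, since a PVC request cannot be shrunk.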

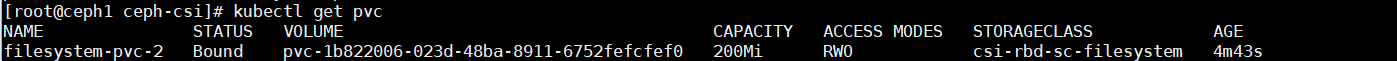

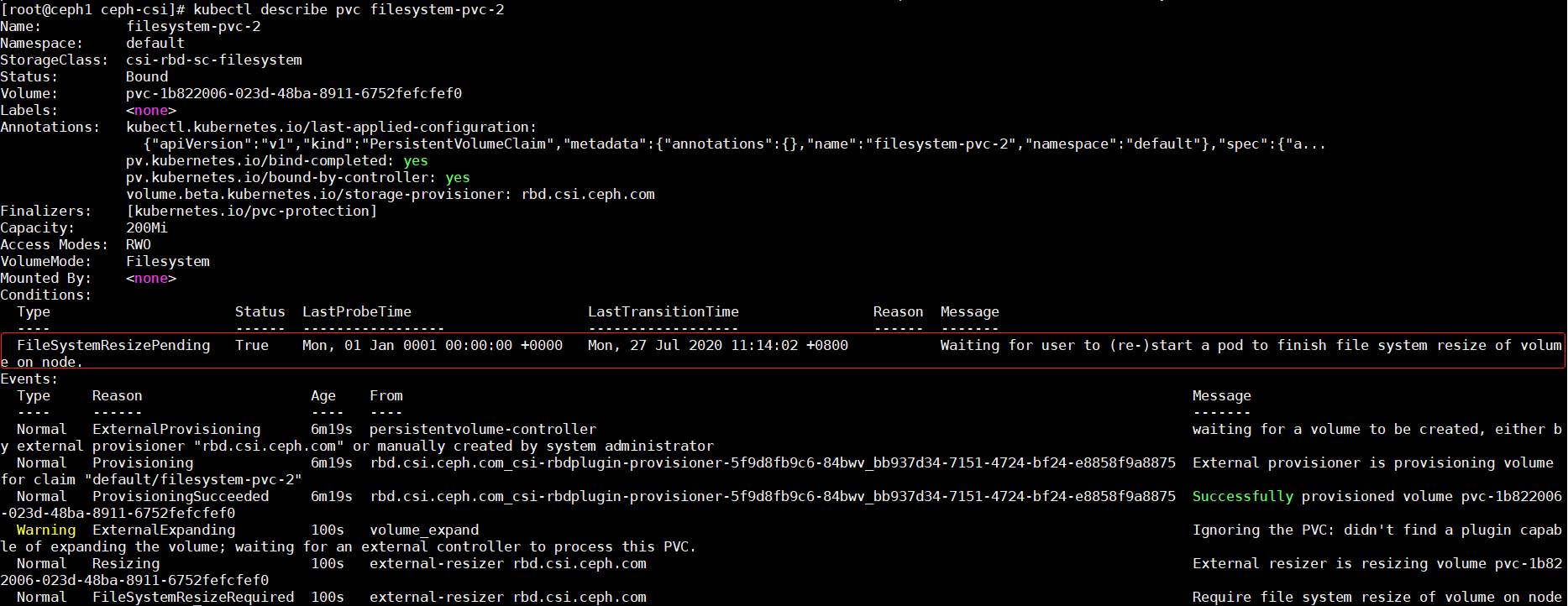

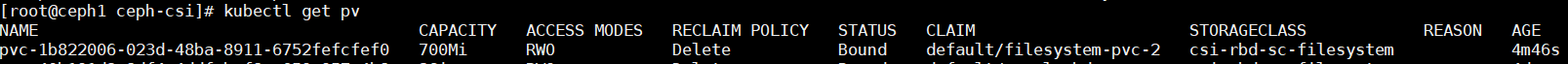

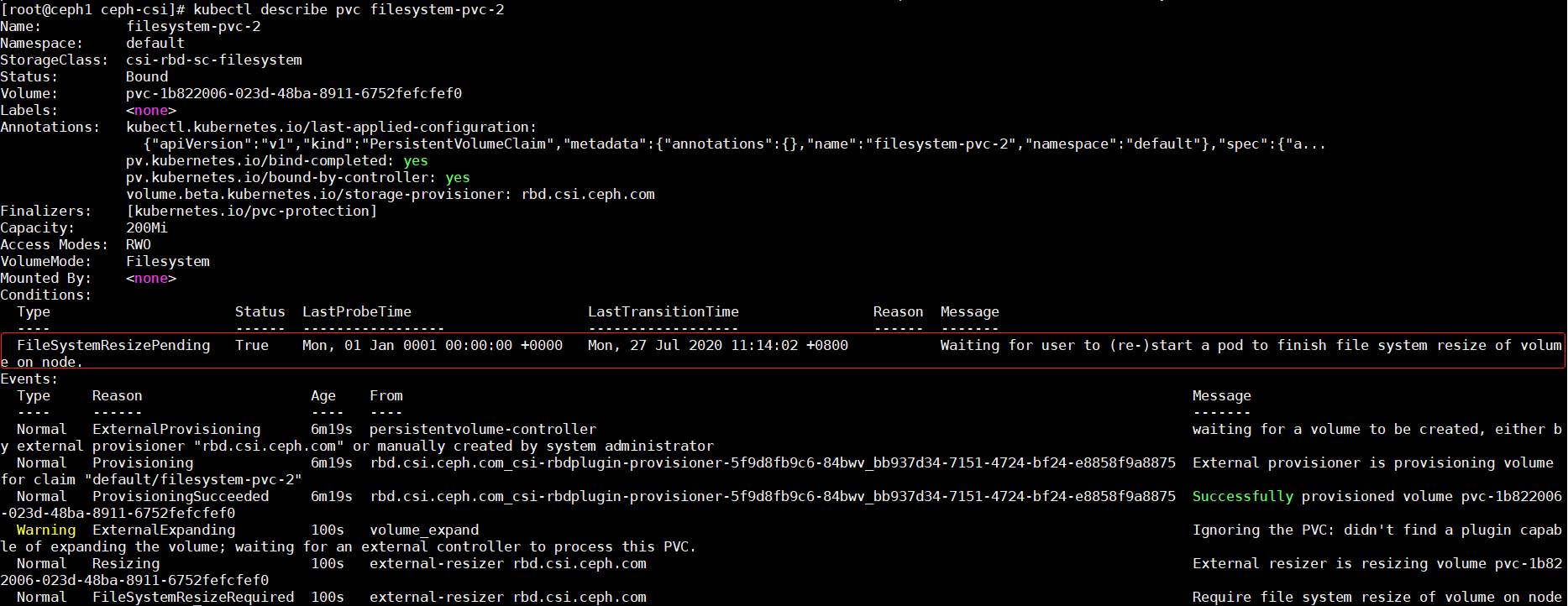

Check the status of the PVC and PV at this point

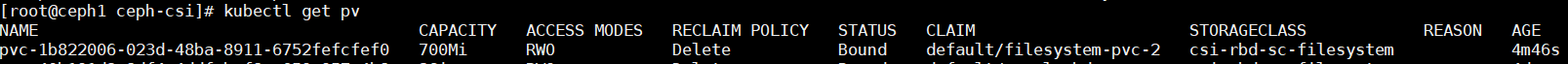

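The PV has already changed to 700M while the PVC has not, so look at the PVC details.

The status section states it clearly: the new size takes effect once the file system is restarted on the client side.

Expansion limitation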
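That pending state can be checked from a script as well. A minimal sketch, assuming the PVC reports the standard Kubernetes condition type `FileSystemResizePending` while it waits for the node-side file system resize; `fs_resize_pending` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: succeed (exit 0) if the space-separated list of PVC
# condition types in $1 contains FileSystemResizePending.
fs_resize_pending() {
    case " $1 " in
        *" FileSystemResizePending "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Usage (requires cluster access):
#   conditions=$(kubectl get pvc filesystem-pvc-2 -o jsonpath='{.status.conditions[*].type}')
#   if fs_resize_pending "$conditions"; then
#       echo "file system resize still pending on the client side"
#   fi
```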

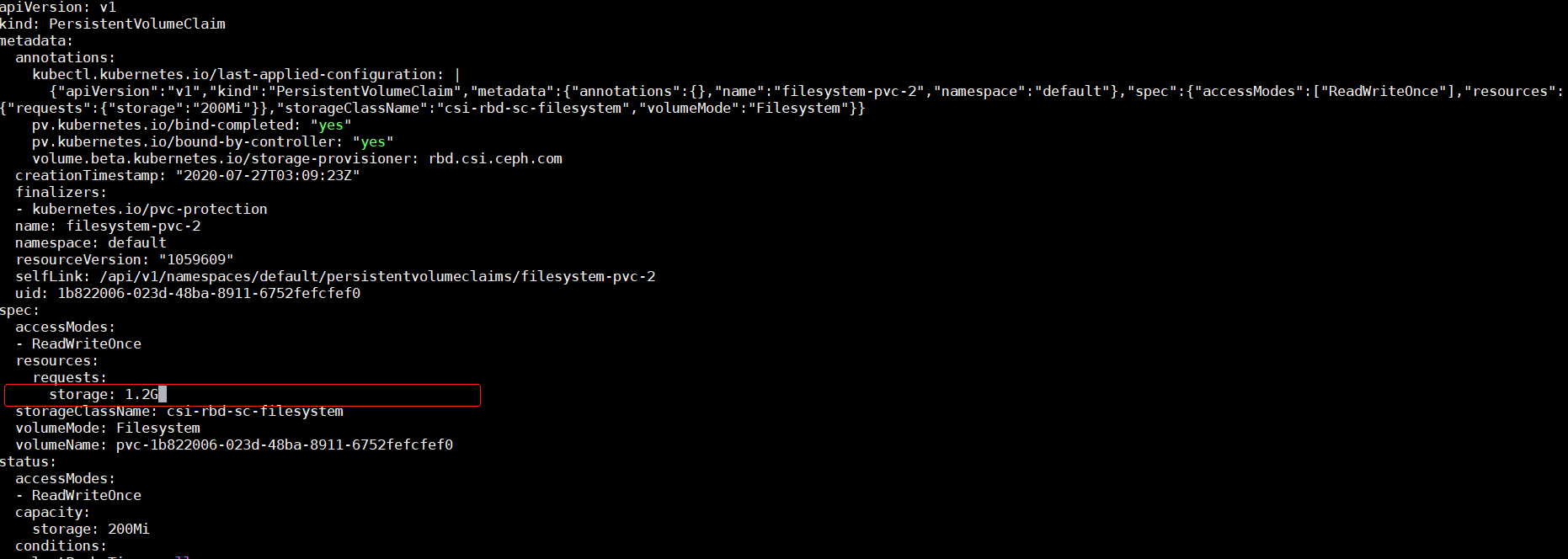

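If the volume is expanded to 1G or less, it is created at the size actually requested. If the requested size exceeds 1G, it is automatically rounded up to a whole number of GB, as in the following example: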

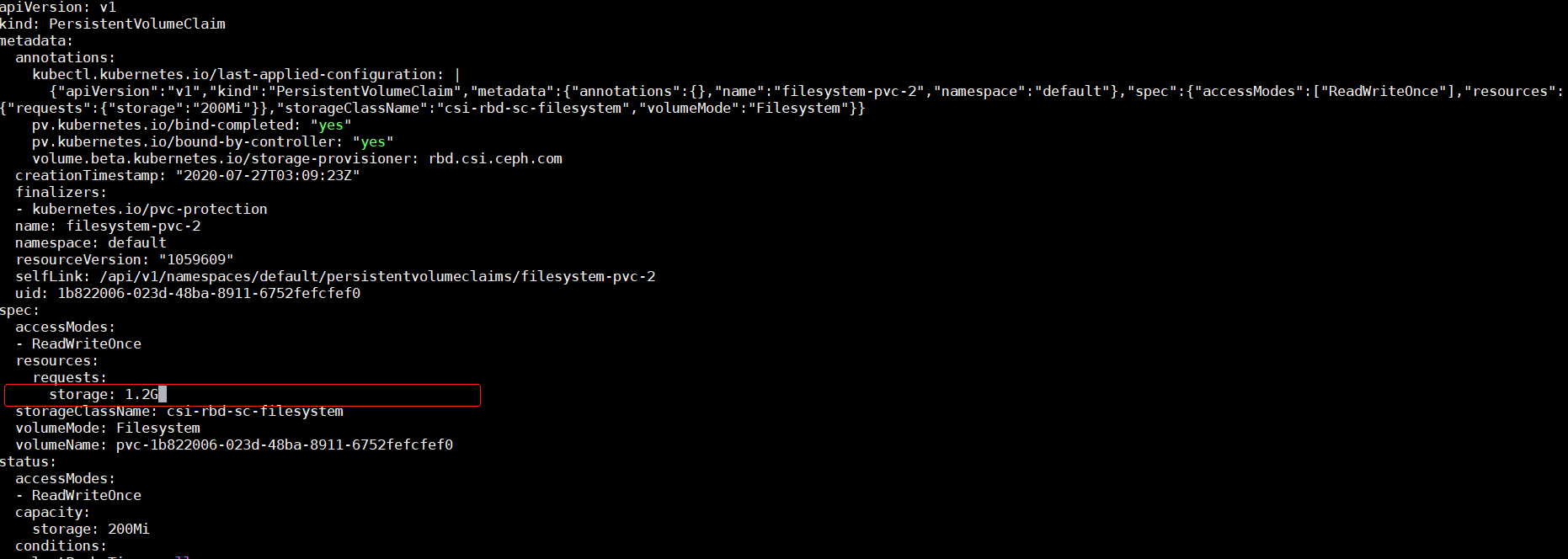

Expand the previously created filesystem-pvc-2 to 1.2G

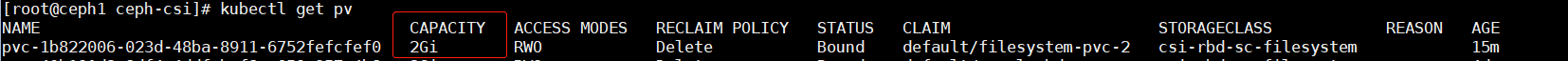

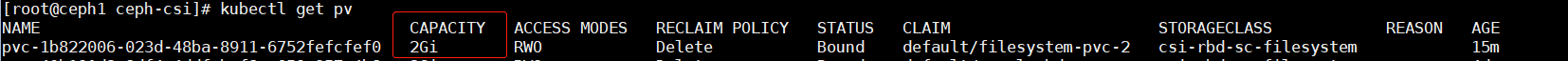

After saving and exiting, the PV size shows that it was rounded up to 2G

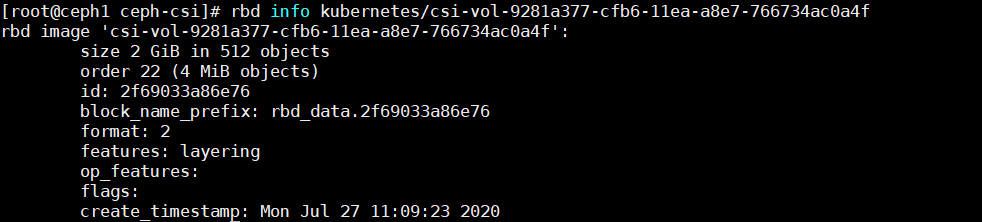

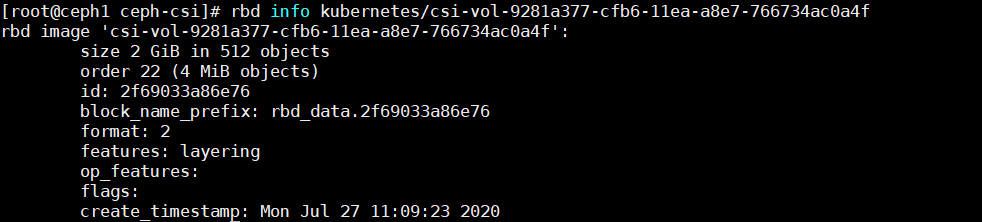

The RBD image size on the Ceph side is also 2G

References

Kubernetes storage introduction series, CSI plugin design: http://newto.me/k8s-csi-design/

Work Kubernetes has done for CSI compatibility: https://www.kubernetes.org.cn/4618.html

Deploying CSI on Kubernetes: https://docs.ceph.com/docs/master/rbd/rbd-kubernetes/#using-ceph-block-devices
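The rounding observed in this test can be modelled with a few lines of shell arithmetic. This is only a model of the behaviour seen above, not code taken from ceph-csi; it assumes binary units (1G taken as 1024 MiB) and whole-MiB inputs, and `expected_size_mib` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical model of the observed behaviour: requests up to 1024 MiB are
# kept as-is, larger requests are rounded up to the next multiple of 1024 MiB.
# $1 is the requested size in whole MiB.
expected_size_mib() {
    req=$1
    if [ "$req" -le 1024 ]; then
        echo "$req"
    else
        echo $(( (req + 1023) / 1024 * 1024 ))
    fi
}

# Usage:
#   expected_size_mib 700    # request of 700 MiB stays unchanged
#   expected_size_mib 1229   # roughly 1.2G, rounded up to 2048 MiB (2G)
```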