Environment

| Hostname | IP address | System resources | Roles |
| --- | --- | --- | --- |
| k8s-1 | 192.168.186.10 | CPU: 2, memory: 2 GB, disk: /dev/sdb 40 GB | K8s_master, Gluster_master, Heketi_master |
| k8s-2 | 192.168.186.11 | CPU: 2, memory: 2 GB, disk: /dev/sdb 40 GB | K8s_node, Gluster_node |
| k8s-3 | 192.168.186.12 | CPU: 2, memory: 2 GB, disk: /dev/sdb 40 GB | K8s_master, Gluster_node |

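Cluster expansion

Adding a new disk

When adding devices, remember to add them as a set. For example, if the volumes you create use replica 2, add a device to two nodes (one device per node). With replica 3, add a device to three nodes.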

Command-line method

Suppose a disk is being added on k8s-3. First look up the name and IP of the pod deployed on k8s-3:

    [root@k8s-1 ~]# kubectl get po -o wide -l glusterfs-node
    NAME              READY   STATUS    RESTARTS   AGE   IP               NODE
    glusterfs-5npwn   1/1     Running   0          20h   192.168.186.10   k8s-1
    glusterfs-8zfzq   1/1     Running   0          20h   192.168.186.11   k8s-2
    glusterfs-bd5dx   1/1     Running   0          20h   192.168.186.12   k8s-3
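The pod for a given node can also be picked out mechanically instead of by eye. The following is a minimal sketch, assuming the `kubectl get po -o wide` table is piped in on stdin and that its header contains a `NODE` column; `pod_on_node` is a hypothetical helper name, not part of kubectl.

```shell
# Print the names of pods scheduled on the node given as $1.
# Reads a `kubectl get po -o wide` table from stdin.
pod_on_node() {
  awk -v node="$1" '
    # Header row: remember the position of the first NODE column.
    NR == 1 { for (i = 1; i <= NF; i++) if ($i == "NODE" && !col) col = i; next }
    # Data rows: print the pod name when the node column matches.
    col && $col == node { print $1 }'
}
```

For example, `kubectl get po -o wide -l glusterfs-node | pod_on_node k8s-3` would print the GlusterFS pod running on k8s-3.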

Confirm the device name of the newly added disk on k8s-3:

    Disk /dev/sdc: 42.9 GB, 42949672960 bytes, 83886080 sectors
    Units = sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

Use heketi-cli to look up the cluster ID and all node IDs:

    [root@k8s-1 ~]# heketi-cli cluster list
    Clusters:
    Id:5dec5676c731498c2bdf996e110a3e5e [file][block]
    [root@k8s-1 ~]# heketi-cli cluster info 5dec5676c731498c2bdf996e110a3e5e
    Cluster id: 5dec5676c731498c2bdf996e110a3e5e
    Nodes:
    0f00835397868d3591f45432e432ba38
    d38819746cab7d567ba5f5f4fea45d91
    fb181b0cef571e9af7d84d2ecf534585
    Volumes:
    32146a51be9f980c14bc86c34f67ebd5
    56d636b452d31a9d4cb523d752ad0891
    828dc2dfaa00b7213e831b91c6213ae4
    b9c68075c6f20438b46db892d15ed45a
    Block: true
    File: true

Find the node ID that corresponds to k8s-3 (the node whose storage hostname is 192.168.186.12):

    [root@k8s-1 ~]# heketi-cli node info 0f00835397868d3591f45432e432ba38
    Node Id: 0f00835397868d3591f45432e432ba38
    State: online
    Cluster Id: 5dec5676c731498c2bdf996e110a3e5e
    Zone: 1
    Management Hostname: k8s-node02
    Storage Hostname: 192.168.186.12
    Devices:
    Id:82af8e5f2fb2e1396f7c9e9f7698a178   Name:/dev/sdb   State:online   Size (GiB):39   Used (GiB):25   Free (GiB):14   Bricks:4
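Checking each node ID by hand gets tedious with more than a few nodes. The sketch below is one way to automate the lookup, assuming the `node info` output keeps the `Storage Hostname:` line shown above and that `heketi-cli` is on the PATH and already pointed at the Heketi server; the function names are hypothetical.

```shell
# Print the "Storage Hostname" value from `heketi-cli node info` output on stdin.
storage_hostname() {
  awk -F': *' '/^[ \t]*Storage Hostname:/ { print $2 }'
}

# Read node IDs from stdin (one per line) and print the ID of every node
# whose storage hostname equals $1.
find_node_by_storage_ip() {
  while read -r id; do
    [ -n "$id" ] || continue
    # </dev/null keeps heketi-cli from consuming the list of IDs on stdin.
    if [ "$(heketi-cli node info "$id" </dev/null | storage_hostname)" = "$1" ]; then
      printf '%s\n' "$id"
    fi
  done
}
```

The node IDs listed by `heketi-cli cluster info` could then be fed in, for example `printf '%s\n' 0f00835397868d3591f45432e432ba38 d38819746cab7d567ba5f5f4fea45d91 | find_node_by_storage_ip 192.168.186.12`.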

Add the disk on k8s-3 to the GlusterFS cluster:

    [root@k8s-1 ~]# heketi-cli device add --name=/dev/sdc --node=0f00835397868d3591f45432e432ba38
    Device added successfully

Check the result:

    [root@k8s-1 ~]# heketi-cli node info 0f00835397868d3591f45432e432ba38
    Node Id: 0f00835397868d3591f45432e432ba38
    State: online
    Cluster Id: 5dec5676c731498c2bdf996e110a3e5e
    Zone: 1
    Management Hostname: k8s-3
    Storage Hostname: 192.168.186.12
    Devices:
    Id:5539e74bc2955e7c70b3a20e72c04615   Name:/dev/sdc   State:online   Size (GiB):39   Used (GiB):0    Free (GiB):39   Bricks:0
    Id:82af8e5f2fb2e1396f7c9e9f7698a178   Name:/dev/sdb   State:online   Size (GiB):39   Used (GiB):25   Free (GiB):14   Bricks:4

Topology file method

A simpler way to add several devices at once is to add them to the node descriptions in the topology file (topology.json) that was used to set up the cluster, and then rerun the load command to load the new topology. The following example adds a new /dev/sdc disk to each node:

    cat >topology.json<<EOF
    {
      "clusters": [
        {
          "nodes": [
            {
              "node": {
                "hostnames": {
                  "manage": [
                    "k8s-1"
                  ],
                  "storage": [
                    "192.168.186.10"
                  ]
                },
                "zone": 1
              },
              "devices": [
                "/dev/sdb",
                "/dev/sdc"
              ]
            },
            {
              "node": {
                "hostnames": {
                  "manage": [
                    "k8s-2"
                  ],
                  "storage": [
                    "192.168.186.11"
                  ]
                },
                "zone": 1
              },
              "devices": [
                "/dev/sdb",
                "/dev/sdc"
              ]
            },
            {
              "node": {
                "hostnames": {
                  "manage": [
                    "k8s-3"
                  ],
                  "storage": [
                    "192.168.186.12"
                  ]
                },
                "zone": 1
              },
              "devices": [
                "/dev/sdb",
                "/dev/sdc"
              ]
            }
          ]
        }
      ]
    }
    EOF

Load the topology into Heketi:

    # heketi-cli topology load --json=topology.json
    Creating cluster ... ID: 224a5a6555fa5c0c930691111c63e863
        Allowing file volumes on cluster.
        Allowing block volumes on cluster.
        Creating node 192.168.186.10 ... ID: 7946b917b91a579c619ba51d9129aeb0
            Found device /dev/sdb
            Adding device /dev/sdc ... OK
        Creating node 192.168.186.11 ... ID: 5d10e593e89c7c61f8712964387f959c
            Found device /dev/sdb
            Adding device /dev/sdc ... OK
        Creating node 192.168.186.12 ... ID: de620cb2c313a5461d5e0a6ae234c553
            Found device /dev/sdb
            Adding device /dev/sdc ... OK

Adding a new node

Suppose k8s-4 (IP 192.168.186.13) is to join the GlusterFS cluster, with its /dev/sdb and /dev/sdc added to the cluster.

First label the node; the DaemonSet then creates a pod on it automatically:

    [root@k8s-1 kubernetes]# kubectl label node k8s-4 storagenode=glusterfs
    node/k8s-4 labeled
    [root@k8s-1 kubernetes]# kubectl get pod -o wide -l glusterfs-node
    NAME              READY   STATUS              RESTARTS   AGE   IP               NODE
    glusterfs-5npwn   1/1     Running             0          21h   192.168.186.11   k8s-2
    glusterfs-8zfzq   1/1     Running             0          21h   192.168.186.10   k8s-1
    glusterfs-96w74   0/1     ContainerCreating   0          2m    192.168.186.13   k8s-4
    glusterfs-bd5dx   1/1     Running             0          21h   192.168.186.12   k8s-3

Run peer probe from the GlusterFS container on any existing node to bring the new node into the trusted pool:

    [root@k8s-1 kubernetes]# kubectl exec -ti glusterfs-5npwn -- gluster peer probe 192.168.186.13
    peer probe: success.

Register the new node in the Heketi database so that Heketi manages it:

    [root@k8s-1 kubernetes]# heketi-cli cluster list
    Clusters:
    Id:5dec5676c731498c2bdf996e110a3e5e [file][block]
    [root@k8s-1 kubernetes]# heketi-cli node add --zone=1 --cluster=5dec5676c731498c2bdf996e110a3e5e --management-host-name=k8s-4 --storage-host-name=192.168.186.13
    Node information:
    Id: 150bc8c458a70310c6137e840619758c
    State: online
    Cluster Id: 5dec5676c731498c2bdf996e110a3e5e
    Zone: 1
    Management Hostname k8s-4
    Storage Hostname 192.168.186.13

Then add the new node's disks to the cluster using either of the two methods described above.

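Volume expansion

Expanding a volume

A volume can be expanded with the following command. The value of --expand-size is the amount of space to add, in GiB:

    # heketi-cli volume expand --volume=volumeID --expand-size=10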
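Because `--expand-size` is the amount to add rather than the new total, it is easy to over-expand when thinking in terms of a target size. A small sketch of the arithmetic, where `expand_delta` is a hypothetical helper and both sizes are whole numbers of GiB:

```shell
# Print how many GiB must be added to grow a volume from $1 GiB to $2 GiB.
# Fails if the target is not larger than the current size.
expand_delta() {
  current=$1
  target=$2
  if [ "$target" -le "$current" ]; then
    echo "target size must be larger than current size" >&2
    return 1
  fi
  echo $((target - current))
}
```

Growing a 10 GiB volume to 25 GiB would then be `heketi-cli volume expand --volume=volumeID --expand-size="$(expand_delta 10 25)"`, which passes 15.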

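Cluster shrinking

Heketi also supports reducing storage capacity, by deleting devices, nodes, and clusters. These changes can be made through the API or with heketi-cli.

Heketi can delete a device only if the device is not in use (Used is 0).

The following shows how to delete unused devices, and then the nodes and the cluster, from Heketi. The variables $node1, $node2, and $cluster are assumed to hold the corresponding IDs: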

    # heketi-cli topology info

    Cluster Id: 6fe4dcffb9e077007db17f737ed999fe

        Volumes:

        Nodes:

        Node Id: 61d019bb0f717e04ecddfefa5555bc41
        State: online
        Cluster Id: 6fe4dcffb9e077007db17f737ed999fe
        Zone: 1
        Management Hostname: k8s-3
        Storage Hostname: 192.168.186.12
        Devices:
            Id:e4805400ffa45d6da503da19b26baad6   Name:/dev/sdb   State:online   Size (GiB):40   Used (GiB):0   Free (GiB):40
                Bricks:
            Id:ecc3c65e4d22abf3980deba4ae90238c   Name:/dev/sdc   State:online   Size (GiB):40   Used (GiB):0   Free (GiB):40
                Bricks:

        Node Id: e97d77d0191c26089376c78202ee2f20
        State: online
        Cluster Id: 6fe4dcffb9e077007db17f737ed999fe
        Zone: 2
        Management Hostname: k8s-4
        Storage Hostname: 192.168.186.13
        Devices:
            Id:3dc3b3f0dfd749e8dc4ee98ed2cc4141   Name:/dev/sdb   State:online   Size (GiB):40   Used (GiB):0   Free (GiB):40
                Bricks:
            Id:4122bdbbe28017944a44e42b06755b1c   Name:/dev/sdc   State:online   Size (GiB):40   Used (GiB):0   Free (GiB):40
                Bricks:

    # d=`heketi-cli topology info | grep Size | awk '{print $1}' | cut -d: -f 2`
    # for i in $d ; do
    > heketi-cli device delete $i
    > done
    Device e4805400ffa45d6da503da19b26baad6 deleted
    Device ecc3c65e4d22abf3980deba4ae90238c deleted
    Device 3dc3b3f0dfd749e8dc4ee98ed2cc4141 deleted
    Device 4122bdbbe28017944a44e42b06755b1c deleted
    # heketi-cli node delete $node1
    Node 61d019bb0f717e04ecddfefa5555bc41 deleted
    # heketi-cli node delete $node2
    Node e97d77d0191c26089376c78202ee2f20 deleted
    # heketi-cli cluster delete $cluster
    Cluster 6fe4dcffb9e077007db17f737ed999fe deleted
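The pipeline above selects every device line, whether or not the device is in use, so it only suits a cluster that is already empty. A more cautious variant, sketched under the assumption that device lines keep the `Id:... Used (GiB):N ...` layout shown in the output, would pick only devices whose Used value is exactly 0; `unused_device_ids` is a hypothetical helper name.

```shell
# Read `heketi-cli topology info` output from stdin and print the IDs of
# devices whose "Used (GiB)" value is exactly 0.
unused_device_ids() {
  awk '/^[ \t]*Id:/ && /Used \(GiB\):0([^0-9]|$)/ {
         sub(/^[ \t]*Id:/, "")   # strip the "Id:" prefix; fields are re-split
         print $1                # first field is now the device ID
       }'
}
```

It could replace the `grep | awk | cut` step, for example `heketi-cli topology info | unused_device_ids`, with the resulting IDs passed to `heketi-cli device delete` as before.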

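Availability tests

Adding a node

Adding a GlusterFS host through the RESTful API works normally, provided that the following is in place beforehand: GlusterFS and LVM are installed on the new node, the dm_thin_pool kernel module is loaded, the required ports are open, the new node carries the glusterfs label (used by the DaemonSet), and the nodes in the cluster can resolve each other's hostnames.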
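Some of these prerequisites can be checked on the new node before it is added. The sketch below covers only the installed tools and the kernel module; the helper names are hypothetical, and the assumption that the relevant binaries are called `gluster` and `lvm` should be verified against the distribution in use.

```shell
# Print each named tool that cannot be found on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Succeed if kernel module $1 is listed in the modules file $2
# (defaults to /proc/modules).
module_loaded() {
  grep -q "^$1 " "${2:-/proc/modules}"
}
```

A pre-flight check might then run `missing_tools gluster lvm` and `module_loaded dm_thin_pool || modprobe dm_thin_pool`. Ports, labels, and name resolution still need to be checked separately.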

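Shutting down a node

These are the pitfalls found while testing high availability.

With three GlusterFS nodes and one of them shut down, clients can read and write.

With three GlusterFS nodes and two of them shut down, clients can read but not write.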
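These two results are consistent with a majority rule for writes on a 3-way replicated volume: writes continue while more than half of the replicas are reachable. This is an interpretation rather than something the tests above establish, and the actual behavior depends on the volume's quorum settings. The arithmetic, as a sketch with a hypothetical helper name:

```shell
# Succeed when strictly more than half of the replicas are reachable.
# $1 = number of replicas that are up, $2 = total number of replicas.
has_write_quorum() {
  up=$1
  total=$2
  [ $((up * 2)) -gt "$total" ]
}
```

With three replicas, `has_write_quorum 2 3` succeeds (one node down, still writable) and `has_write_quorum 1 3` fails (two nodes down, read-only).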

After the VM was shut down, Heketi still showed the node as online, and its bricks were not synced to the newly added VM. After the powered-off machine was removed from Heketi, the bricks were synced to the new node. The steps were as follows:

    [root@redhat1 /]# heketi-cli -s http://redhat1:30080 node list
    Id:05ac57499e3fbc0f8ec5a3301fac92c7   Cluster:43c28e6f4b4e05f58ebd3b6f158982cd
    Id:5e10a4c264a2fc13ecdf8d2482ba7281   Cluster:43c28e6f4b4e05f58ebd3b6f158982cd
    Id:b1e3ba52f6e8c82e8b3e512798348876   Cluster:43c28e6f4b4e05f58ebd3b6f158982cd
    Id:f1bd8cd0f52b2d91b13e79799a34c2ed   Cluster:43c28e6f4b4e05f58ebd3b6f158982cd

    f1bd8cd0f52b2d91b13e79799a34c2ed is the node that was shut down
    5e10a4c264a2fc13ecdf8d2482ba7281 is the newly added node

    [root@redhat1 /]# heketi-cli -s http://redhat1:30080 node disable f1bd8cd0f52b2d91b13e79799a34c2ed
    Node f1bd8cd0f52b2d91b13e79799a34c2ed is now offline

    [root@redhat1 /]# heketi-cli -s http://redhat1:30080 node remove f1bd8cd0f52b2d91b13e79799a34c2ed
    Node f1bd8cd0f52b2d91b13e79799a34c2ed is now removed

    [root@redhat1 /]# heketi-cli -s http://redhat1:30080 device delete ff257d2350f05f7f5ebaa2853e5815e8
    Error: Failed to delete device /dev/sdb with id ff257d2350f05f7f5ebaa2853e5815e8 on host redhat3: error dialing backend: dial tcp 192.168.186.12:10250: connect: no route to host

    [root@redhat1 /]# heketi-cli -s http://redhat1:30080 node delete f1bd8cd0f52b2d91b13e79799a34c2ed
    Error: Unable to delete node [f1bd8cd0f52b2d91b13e79799a34c2ed] because it contains devices
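The order of operations in this transcript (disable the node, remove it, delete its devices, delete the node) can be written down as a dry run before anything is executed. The sketch below only prints the commands; `retire_node_plan` is a hypothetical helper, and as the transcript shows, the device and node deletion steps can still fail while the host is unreachable.

```shell
# Print (without running) the heketi-cli commands for retiring a node.
# $1 = Heketi server URL, $2 = node ID, remaining arguments = device IDs.
retire_node_plan() {
  server=$1
  node=$2
  shift 2
  echo "heketi-cli -s $server node disable $node"
  echo "heketi-cli -s $server node remove $node"
  for dev in "$@"; do
    echo "heketi-cli -s $server device delete $dev"
  done
  echo "heketi-cli -s $server node delete $node"
}
```

For the node above, `retire_node_plan http://redhat1:30080 f1bd8cd0f52b2d91b13e79799a34c2ed ff257d2350f05f7f5ebaa2853e5815e8` prints the four commands in order so they can be reviewed and then run one at a time.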

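The errors occur because the node is powered off and cannot be reached. Checking the shut-down node at this point shows that its bricks are gone; they have been synced to the newly added node.

Until the old node has been completely purged from the cluster, the new node cannot reuse the identity of the node that was shut down.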

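Disk failure

In a local setup with three GlusterFS nodes, each with one raw disk attached, one of the disks was deleted to simulate a disk failure. Heketi still showed the device, and showed it as in use. After logging in to that node and running partprobe to refresh the disk information, the LV and VG were gone but the PV still held residual data. After the node was rebooted the residual data disappeared, yet Heketi still showed the device as in use.

Deleting the device manually fails with the following error: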

Error: Failed to remove device, error: No Replacement was found for resource

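This error occurs because the number of available storage devices is currently below the three required by the replica count, so Heketi has nowhere to move the bricks.

Solution:

Add a new device first, then delete the original device.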

brick损坏 手动删除gfs节点的一个brick:

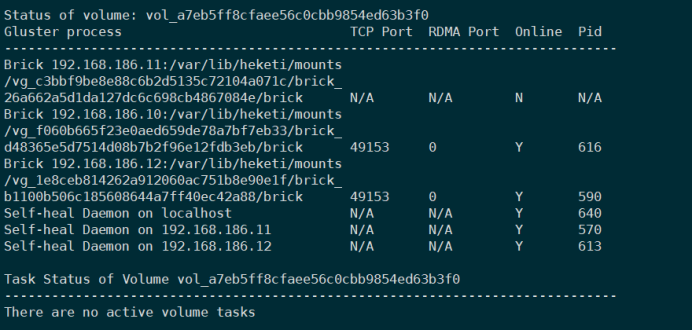

查看当前volume的状态,此时brick显示离线:

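Solution:

First remove the brick from the volume:

    # gluster volume remove-brick <volume-name> replica <count> <brick> force

Here the replica count is 2: the volume was created with a replica count of 3, and removing the brick reduces it to 2.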

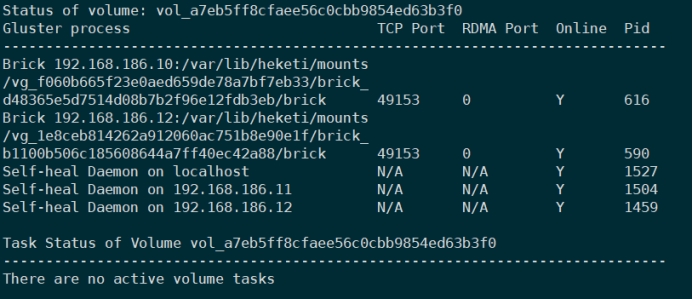

Checking the volume status now shows that the brick has been removed and the volume has 2 replicas.

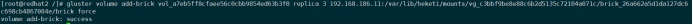

Add the brick back:

    # gluster volume add-brick <volume-name> replica <count> <brick> force

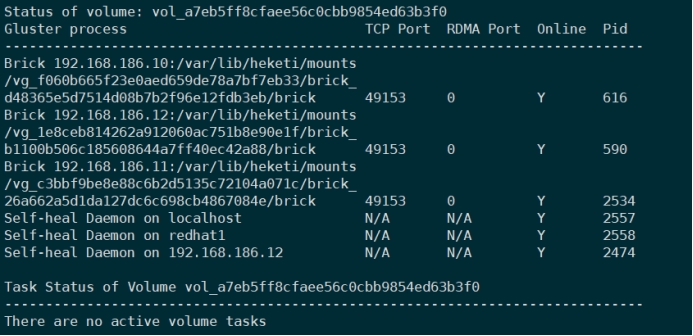

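Check the volume status; the brick has been added back.

The data in this brick has been restored.

The procedure above simulates the failure of a single brick. If the volume can tolerate a restart, quickly restarting it is another recovery option worth trying.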

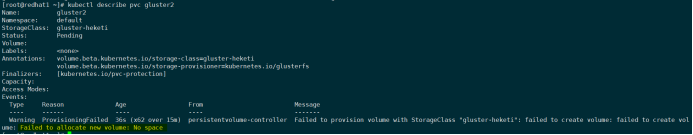

Creating a volume larger than the remaining space

The volume cannot be created, and an error is reported.

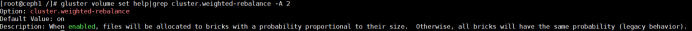

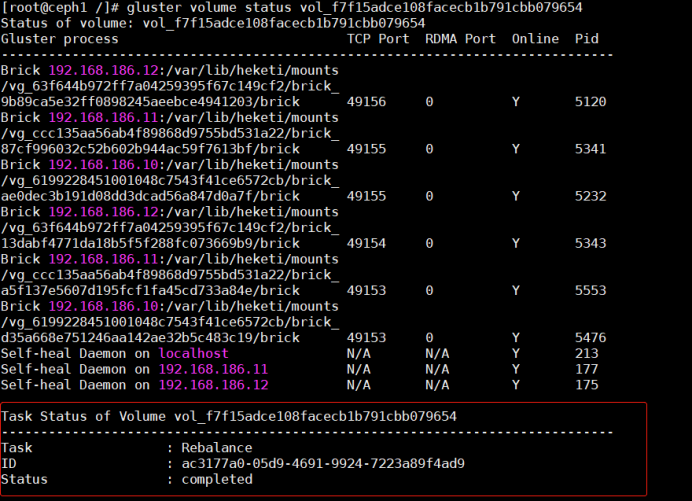

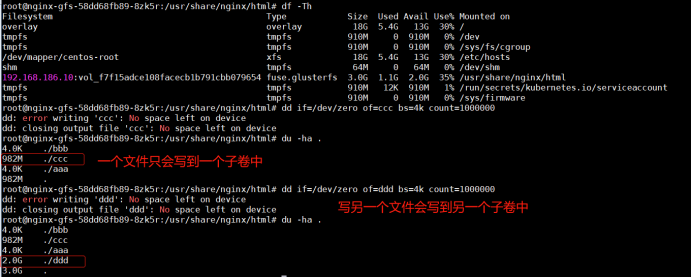

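Expanding a volume

Request a 1 GB PVC in advance, mount it into an application, and write some data. Then stop the application, expand the PVC to 2 GB, start the application again, and test writes.

Expanding a volume amounts to adding another subvolume alongside the original one. Even when the new subvolume has free space, GlusterFS's hash-based addressing keeps reading and writing the old subvolume, because it looks up the hash values of the existing files. Running a rebalance on the volume to trigger data redistribution had no effect (a rebalance is also run automatically after expansion).

Estimate the required capacity in advance when creating a storage volume.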