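GlusterFS provides the underlying storage, and Heketi provides a RESTful API for GlusterFS. Heketi requires a raw disk on every GlusterFS node: it currently supports adding only raw (unformatted) disks as devices, not file systems, because Heketi creates the LVM physical volumes (PVs) and volume groups (VGs) itself in order to manage GlusterFS.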

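Once the cluster is managed by Heketi, do not manage volumes with the gluster command directly; otherwise the cluster state will diverge from the information stored in Heketi's database.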

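GlusterFS supports all three Kubernetes PV access modes: ReadWriteOnce, ReadOnlyMany and ReadWriteMany. An access mode only describes a capability and is not enforced; if a PV is used differently from what the PVC declares, the storage provider is responsible for any resulting runtime errors. For example, if a PVC's access mode is ReadOnlyMany, a pod that mounts it can still write to it. To make the mount truly read-only, set `readOnly: true` where the pod references the PVC.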
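A minimal sketch of a pod that mounts the gluster1 PVC (created later in this walkthrough) read-only. The pod name `gfs-readonly-test`, the busybox image and the file name `gfs-readonly-pod.yaml` are assumptions for illustration; the field that matters is `readOnly: true` under `persistentVolumeClaim`.

```bash
# Write a test pod manifest that mounts PVC "gluster1" read-only.
# Pod name, image and file name are illustrative assumptions.
cat >gfs-readonly-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: gfs-readonly-test
spec:
  containers:
    - name: reader
      image: busybox
      command: ["sleep", "3600"]
      volumeMounts:
        - name: gfs
          mountPath: /data
  volumes:
    - name: gfs
      persistentVolumeClaim:
        claimName: gluster1
        # Enforced at mount time; the PVC access mode alone is not enforced.
        readOnly: true
EOF
```

After `kubectl apply -f gfs-readonly-pod.yaml`, a write such as `kubectl exec gfs-readonly-test -- touch /data/x` should be rejected with a read-only file system error.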

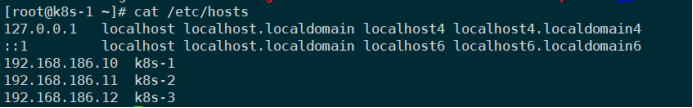

Environment

| Hostname | IP address | System resources | Roles |
|---|---|---|---|
| k8s-1 | 192.168.186.10 | CPU: 2, memory: 2 GB, disk: /dev/sdb 10 GB | K8s_master, Gluster_master, Heketi_master |
| k8s-2 | 192.168.186.11 | CPU: 2, memory: 2 GB, disk: /dev/sdb 10 GB | K8s_node, Gluster_node |
| k8s-3 | 192.168.186.12 | CPU: 2, memory: 2 GB, disk: /dev/sdb 10 GB | K8s_node, Gluster_node |

If iptables restrictions are in place, run the following commands to open the required ports:

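```bash
iptables -N heketi
# 24007: GlusterFS daemon (glusterd)
iptables -A heketi -p tcp -m state --state NEW -m tcp --dport 24007 -j ACCEPT
# 24008: GlusterFS management
iptables -A heketi -p tcp -m state --state NEW -m tcp --dport 24008 -j ACCEPT
# 2222: sshd inside the GlusterFS pod
iptables -A heketi -p tcp -m state --state NEW -m tcp --dport 2222 -j ACCEPT
# 49152-49251: one port per brick
iptables -A heketi -p tcp -m state --state NEW -m multiport --dports 49152:49251 -j ACCEPT
# A user-defined chain is only evaluated if a built-in chain jumps to it
iptables -I INPUT -j heketi
service iptables save
```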

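Installation steps

The test below deploys GFS in containers. GlusterFS is deployed as a DaemonSet, which guarantees that every node that should provide GFS runs exactly one GFS management service.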

Run on all three nodes: every node must be able to resolve the hostnames of all nodes, so make sure /etc/hosts is configured correctly.

Install GlusterFS. Each node must load the dm_thin_pool module (and the related device-mapper modules) in advance:

```bash
# modprobe dm_thin_pool
# modprobe dm_snapshot
# modprobe dm_mirror
```

Load the modules automatically at boot:

```bash
# cat >/etc/modules-load.d/glusterfs.conf <<EOF
dm_thin_pool
dm_snapshot
dm_mirror
EOF
```
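To confirm the modules are actually loaded (for example after a reboot), a small check against `/proc/modules` can help. This is a sketch: the function name `missing_modules` is hypothetical, and modules compiled into the kernel do not appear in `/proc/modules`, so they would be reported as missing.

```bash
# Print each required device-mapper module that is not currently loaded.
# Reads /proc/modules by default; another file can be passed for testing.
missing_modules() {
  local modfile="${1:-/proc/modules}" m
  for m in dm_thin_pool dm_snapshot dm_mirror; do
    # The module name is the first whitespace-separated field of each line.
    if ! awk -v m="$m" '$1 == m { found = 1 } END { exit !found }' "$modfile"; then
      echo "$m"
    fi
  done
}
```

Running `missing_modules` with no arguments prints nothing when all three modules are loaded.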

Install glusterfs-fuse (and lvm2):

```bash
# yum install -y glusterfs-fuse lvm2
```

Run on the first node: install GlusterFS and Heketi

Install the Heketi client:

https://github.com/heketi/heketi/releases

Download the desired release from GitHub:

```bash
[root@k8s-1 ~]# wget https://github.com/heketi/heketi/releases/download/v9.0.0/heketi-client-v9.0.0.linux.amd64.tar.gz
[root@k8s-1 ~]# tar xf heketi-client-v9.0.0.linux.amd64.tar.gz
[root@k8s-1 ~]# cp heketi-client/bin/heketi-cli /usr/local/bin
```
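The release tag in the download path and the version in the tarball name must match. A small sketch that builds the URL from a single version variable keeps them in sync; the function name `heketi_client_url` is hypothetical, and the URL pattern is the one used in the commands above.

```bash
# Build the heketi-client download URL from one version string so the
# release tag in the path and the tarball name always match.
heketi_client_url() {
  local version="${1:-v9.0.0}" arch="${2:-amd64}"
  printf 'https://github.com/heketi/heketi/releases/download/%s/heketi-client-%s.linux.%s.tar.gz\n' \
    "$version" "$version" "$arch"
}
```

Usage: `wget "$(heketi_client_url v9.0.0)"`.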

Check the version:

```bash
[root@k8s-1 ~]# heketi-cli -v
```

All subsequent deployment steps are run from this directory:

```bash
[root@k8s-1 ~]# cd heketi-client/share/heketi/kubernetes
```

Deploy GlusterFS in Kubernetes:

```bash
[root@k8s-1 kubernetes]# kubectl create -f glusterfs-daemonset.json
```

This uses the default mount configuration; another disk can be used as the GFS working directory instead.

The resources are created in the default namespace; change this as needed.

Label the nodes that provide storage:

```bash
[root@k8s-1 kubernetes]# kubectl label node k8s-1 k8s-2 k8s-3 storagenode=glusterfs
```

Check the GlusterFS status:

```bash
[root@k8s-1 kubernetes]# kubectl get pods -o wide
```

Deploy the Heketi server

Configure the Heketi server's permissions:

```bash
[root@k8s-1 kubernetes]# kubectl create -f heketi-service-account.json
[root@k8s-1 kubernetes]# kubectl create clusterrolebinding heketi-gluster-admin --clusterrole=edit --serviceaccount=default:heketi-service-account
```

Create the config secret:

```bash
[root@k8s-1 kubernetes]# kubectl create secret generic heketi-config-secret --from-file=./heketi.json
```

Bootstrap deployment:

```bash
[root@k8s-1 kubernetes]# kubectl create -f heketi-bootstrap.json
```

Check the Heketi bootstrap status:

```bash
[root@k8s-1 kubernetes]# kubectl get pods -o wide
[root@k8s-1 kubernetes]# kubectl get svc
```

Set up port forwarding to the Heketi server:

```bash
[root@k8s-1 kubernetes]# HEKETI_BOOTSTRAP_POD=$(kubectl get pods | grep deploy-heketi | awk '{print $1}')
[root@k8s-1 kubernetes]# kubectl port-forward $HEKETI_BOOTSTRAP_POD 58080:8080
```

Test access from a second terminal, since port-forward keeps running in the foreground:

```bash
[root@k8s-1 ~]# curl http://localhost:58080/hello
```
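If you would rather stay in one terminal, the port-forward can run in the background while a loop polls `/hello` until Heketi answers. This is a sketch: `wait_for_heketi` is a hypothetical helper, and it assumes curl is installed.

```bash
# Poll heketi's /hello endpoint until it answers or the retry budget runs out.
# Usage: wait_for_heketi URL [TRIES]; returns 0 as soon as heketi responds.
wait_for_heketi() {
  local url="$1" tries="${2:-30}" i=0
  while [ "$i" -lt "$tries" ]; do
    # -s: silent; -f: treat HTTP error responses as failures
    if curl -sf "$url/hello" >/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}
```

For example: `kubectl port-forward $HEKETI_BOOTSTRAP_POD 58080:8080 >/dev/null 2>&1 &` followed by `wait_for_heketi http://localhost:58080 && echo "heketi is up"`.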

Configure GlusterFS

The hostnames/manage field must match the node names shown by `kubectl get node`.

hostnames/storage specifies the storage-network IP; this test uses the same IPs as the Kubernetes cluster.

```bash
[root@k8s-1 kubernetes]# cat >topology.json<<EOF
{
  "clusters": [
    {
      "nodes": [
        {
          "node": {
            "hostnames": {
              "manage": [
                "k8s-1"
              ],
              "storage": [
                "192.168.186.10"
              ]
            },
            "zone": 1
          },
          "devices": [
            "/dev/sdb"
          ]
        },
        {
          "node": {
            "hostnames": {
              "manage": [
                "k8s-2"
              ],
              "storage": [
                "192.168.186.11"
              ]
            },
            "zone": 1
          },
          "devices": [
            "/dev/sdb"
          ]
        },
        {
          "node": {
            "hostnames": {
              "manage": [
                "k8s-3"
              ],
              "storage": [
                "192.168.186.12"
              ]
            },
            "zone": 1
          },
          "devices": [
            "/dev/sdb"
          ]
        }
      ]
    }
  ]
}
EOF
```
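Writing this file by hand gets error-prone as nodes are added. A sketch of a generator that produces the same structure (one cluster, every node in zone 1, the same raw device on each node) from `hostname=ip` pairs; the function name `gen_topology` and its argument format are hypothetical.

```bash
# Usage: gen_topology DEVICE HOST=IP [HOST=IP ...]
# Prints a heketi topology.json with one cluster, all nodes in zone 1 and the
# same raw DEVICE on each node, mirroring the hand-written file above.
gen_topology() {
  local device="$1" first=1 pair host ip
  shift
  printf '{\n  "clusters": [\n    {\n      "nodes": [\n'
  for pair in "$@"; do
    host=${pair%%=*}
    ip=${pair#*=}
    # Separate node objects with commas, but not before the first one.
    if [ "$first" -eq 1 ]; then first=0; else printf ',\n'; fi
    printf '        {\n          "node": {\n'
    printf '            "hostnames": { "manage": ["%s"], "storage": ["%s"] },\n' "$host" "$ip"
    printf '            "zone": 1\n          },\n'
    printf '          "devices": ["%s"]\n        }' "$device"
  done
  printf '\n      ]\n    }\n  ]\n}\n'
}
```

Usage: `gen_topology /dev/sdb k8s-1=192.168.186.10 k8s-2=192.168.186.11 k8s-3=192.168.186.12 >topology.json`.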

Load the topology into Heketi:

```bash
[root@k8s-1 kubernetes]# export HEKETI_CLI_SERVER=http://localhost:58080
[root@k8s-1 kubernetes]# heketi-cli topology load --json=topology.json
```

Use Heketi to create a volume that stores the Heketi database:

```bash
[root@k8s-1 kubernetes]# heketi-cli setup-openshift-heketi-storage
[root@k8s-1 kubernetes]# kubectl create -f heketi-storage.json
```

heketi-storage.json creates heketi-storage-endpoints, which specifies the GFS addresses and port (the port defaults to 1), and heketi-storage-copy-job. This job copies Heketi's data file into /heketi; the /heketi directory is mounted from the heketi-storage volume, which was created earlier when `heketi-cli setup-openshift-heketi-storage` ran.

Check the status, and wait until every job has finished (status Completed) before continuing:

```bash
[root@k8s-1 kubernetes]# kubectl get pods
[root@k8s-1 kubernetes]# kubectl get job
```
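Rather than re-running `kubectl get job` by hand, the wait can be scripted. kubectl's built-in form is `kubectl wait --for=condition=complete job/heketi-storage-copy-job --timeout=300s`; the sketch below is a hypothetical polling helper that does the same by reading the job's `.status.succeeded` field.

```bash
# Poll a Job until at least one pod has succeeded, or give up.
# Usage: wait_job_complete JOB [TRIES]; polls every 2 seconds.
wait_job_complete() {
  local job="$1" tries="${2:-60}" i=0 succeeded
  while [ "$i" -lt "$tries" ]; do
    succeeded=$(kubectl get job "$job" -o jsonpath='{.status.succeeded}' 2>/dev/null)
    # An empty field means nothing has succeeded yet; treat it as 0.
    if [ "${succeeded:-0}" -ge 1 ] 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep 2
  done
  return 1
}
```

Usage: `wait_job_complete heketi-storage-copy-job && echo "copy job finished"`.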

Delete the resources created during the bootstrap:

```bash
[root@k8s-1 kubernetes]# kubectl delete all,service,jobs,deployment,secret --selector="deploy-heketi"
```

Deploy the Heketi server:

```bash
[root@k8s-1 kubernetes]# kubectl create -f heketi-deployment.json
```

Check the Heketi server status:

```bash
[root@k8s-1 kubernetes]# kubectl get pods -o wide
[root@k8s-1 kubernetes]# kubectl get svc
```

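Check Heketi's status information through port forwarding to the Heketi server (port-forward blocks, so run it in the background or in a separate terminal):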

```bash
[root@k8s-1 kubernetes]# HEKETI_POD=$(kubectl get pods | grep heketi | awk '{print $1}')
[root@k8s-1 kubernetes]# kubectl port-forward $HEKETI_POD 58080:8080 &
[root@k8s-1 kubernetes]# export HEKETI_CLI_SERVER=http://localhost:58080
[root@k8s-1 kubernetes]# heketi-cli cluster list
[root@k8s-1 kubernetes]# heketi-cli volume list
```

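Alternatively, you can change the Heketi service type to NodePort and add `spec.template.spec.hostNetwork: true` to the GlusterFS DaemonSet. You then no longer need port forwarding to reach Heketi and can simply run `heketi-cli -s http://<server>:<port>`.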
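Once the service is a NodePort, the server URL for `heketi-cli -s` can be looked up rather than copied by hand. A sketch, assuming the service is named `heketi` (the name heketi-deployment.json appears to use; treat it as an assumption) and that any node IP can be used to reach the NodePort; `heketi_nodeport_url` is a hypothetical helper.

```bash
# Print http://NODE_IP:NODE_PORT for the heketi NodePort service.
# Usage: heketi_nodeport_url NODE_IP [SERVICE]; SERVICE defaults to "heketi".
heketi_nodeport_url() {
  local node_ip="$1" svc="${2:-heketi}" port
  port=$(kubectl get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  # An empty nodePort means the service is not (yet) of type NodePort.
  [ -n "$port" ] || return 1
  printf 'http://%s:%s\n' "$node_ip" "$port"
}
```

Usage: `kubectl patch svc heketi -p '{"spec":{"type":"NodePort"}}'`, then `heketi-cli -s "$(heketi_nodeport_url 192.168.186.10)" cluster list`.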

Create a StorageClass

```bash
[root@k8s-1 kubernetes]# HEKETI_SERVER=$(kubectl get svc | grep heketi | head -1 | awk '{print $3}')
[root@k8s-1 kubernetes]# echo $HEKETI_SERVER
[root@k8s-1 kubernetes]# cat >storageclass-glusterfs.yaml<<EOF
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: gluster-heketi
provisioner: kubernetes.io/glusterfs
# reclaimPolicy: Retain
parameters:
  resturl: "http://$HEKETI_SERVER:8080"
  gidMin: "40000"
  gidMax: "50000"
  volumetype: "replicate:3"
# Allow PVCs to be expanded (a top-level field, not a parameter)
allowVolumeExpansion: true
EOF
[root@k8s-1 kubernetes]# kubectl create -f storageclass-glusterfs.yaml
```
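If the `kubectl get svc | grep heketi` lookup returns nothing, the heredoc above silently writes `resturl: "http://:8080"`. A sketch of a render step that refuses to write the file when the address is empty; `render_storageclass` is a hypothetical helper and the manifest body matches the one above (with `reclaimPolicy` left at its default).

```bash
# Render the gluster-heketi StorageClass, refusing to run when the heketi
# address is empty (which would otherwise yield resturl "http://:8080").
# Usage: render_storageclass HEKETI_IP [OUTPUT_FILE]
render_storageclass() {
  local server="$1" out="${2:-storageclass-glusterfs.yaml}"
  if [ -z "$server" ]; then
    echo "render_storageclass: heketi server address is empty" >&2
    return 1
  fi
  cat >"$out" <<EOF
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: gluster-heketi
provisioner: kubernetes.io/glusterfs
parameters:
  resturl: "http://${server}:8080"
  gidMin: "40000"
  gidMax: "50000"
  volumetype: "replicate:3"
allowVolumeExpansion: true
EOF
}
```

Usage: `render_storageclass "$(kubectl get svc heketi -o jsonpath='{.spec.clusterIP}')" && kubectl create -f storageclass-glusterfs.yaml` (the service name `heketi` is an assumption).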

The above creates a GlusterFS StorageClass with three replicas.

The replica count in volumetype (replicate:N) must be greater than 1; otherwise creating a PVC fails with an error.
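The rule above is easy to check before creating the StorageClass. A minimal sketch that validates only the `replicate:N` form and accepts any other volumetype value unchecked; `check_volumetype` is a hypothetical helper.

```bash
# Return 0 if a volumetype value passes the rule above:
# for "replicate:N", N must be an integer greater than 1.
# Other volumetype forms are not checked here and are accepted as-is.
check_volumetype() {
  local n
  case $1 in
    replicate:*)
      n=${1#replicate:}
      # Reject an empty or non-numeric replica count.
      case $n in
        '' | *[!0-9]*) return 1 ;;
      esac
      [ "$n" -gt 1 ]
      ;;
    *)
      return 0
      ;;
  esac
}
```

Usage: `check_volumetype "replicate:3" || echo "invalid volumetype"`.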

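If the StorageClass explicitly sets reclaimPolicy to Retain (the default is Delete; the line is commented out in the manifest above), deleting a PVC does not delete the PV or the backing GlusterFS volume and bricks (LVM).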

Create a PVC

```bash
[root@k8s-1 kubernetes]# cat >gluster-pvc-test.yaml<<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: gluster1
  annotations:
    volume.beta.kubernetes.io/storage-class: gluster-heketi
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
EOF
[root@k8s-1 kubernetes]# kubectl apply -f gluster-pvc-test.yaml
```
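The `volume.beta.kubernetes.io/storage-class` annotation is deprecated; `spec.storageClassName` is the field that replaced it. A sketch of a helper that prints an equivalent PVC manifest for other names and sizes; `pvc_manifest` is hypothetical.

```bash
# Print a PVC manifest bound to the gluster-heketi StorageClass.
# Usage: pvc_manifest NAME [SIZE] [ACCESS_MODE]
pvc_manifest() {
  local name="$1" size="${2:-1Gi}" mode="${3:-ReadWriteMany}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  storageClassName: gluster-heketi
  accessModes:
    - ${mode}
  resources:
    requests:
      storage: ${size}
EOF
}
```

Usage: `pvc_manifest gluster2 2Gi | kubectl apply -f -`.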

Check the volume status:

```bash
[root@k8s-1 kubernetes]# kubectl get pvc
[root@k8s-1 kubernetes]# kubectl get pv
```

Create a test service that mounts the volume (save the manifest as, for example, nginx-gfs.yaml):

```bash
[root@k8s-1 kubernetes]# cat >nginx-gfs.yaml<<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-gfs
  labels:
    name: nginx-gfs
spec:
  replicas: 2
  selector:
    matchLabels:
      name: nginx-gfs
  template:
    metadata:
      labels:
        name: nginx-gfs
    spec:
      containers:
      - name: nginx-gfs
        image: nginx
        ports:
        - name: web
          containerPort: 80
        volumeMounts:
        - name: gfs
          mountPath: /usr/share/nginx/html
      volumes:
      - name: gfs
        persistentVolumeClaim:
          claimName: gluster1
EOF
[root@k8s-1 kubernetes]# kubectl apply -f nginx-gfs.yaml
```

Check that the pods started normally:

```bash
[root@k8s-1 kubernetes]# kubectl get pods -o wide
```
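Because both nginx-gfs replicas mount the same ReadWriteMany volume, a file written through one pod should be visible through the other. A sketch of that check; `check_shared_volume` is hypothetical, and note that it overwrites the target file (index.html by default).

```bash
# Write a unique token through one pod and read it back through another.
# Usage: check_shared_volume WRITER_POD READER_POD [PATH]
# Warning: overwrites PATH inside the shared volume.
check_shared_volume() {
  local writer="$1" reader="$2" path="${3:-/usr/share/nginx/html/index.html}"
  local token="gfs-check-$$"
  kubectl exec "$writer" -- sh -c "echo $token > $path" || return 1
  # Succeeds only if the second pod sees exactly what the first one wrote.
  [ "$(kubectl exec "$reader" -- cat "$path")" = "$token" ]
}
```

The pod names can be obtained with `kubectl get pods -l name=nginx-gfs -o jsonpath='{.items[*].metadata.name}'`.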

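Test PVC expansion

Change the capacity of pvc/gluster1 from 1G to 2G. The change takes effect automatically after a short while, after which the PV, the PVC and the mount inside the container all show 2G (in my tests it took about one minute). Expanding again after stopping the containers also worked.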
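Instead of editing the PVC interactively, the new size can be applied with `kubectl patch`; this only works because the StorageClass sets `allowVolumeExpansion: true`. `pvc_resize_patch` is a hypothetical helper that prints the patch body.

```bash
# Print a patch that sets a PVC's requested storage to SIZE (e.g. 2Gi).
pvc_resize_patch() {
  printf '{"spec":{"resources":{"requests":{"storage":"%s"}}}}\n' "$1"
}
```

Usage: `kubectl patch pvc gluster1 -p "$(pvc_resize_patch 2Gi)"`, then watch `kubectl get pvc gluster1` until the capacity changes.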

Analysis

How Heketi operates on the disks

Let's go back and look at what Heketi did when it loaded the GFS topology:

```bash
$ heketi-cli topology load --json=topology-sample.json
Creating cluster ... ID: 224a5a6555fa5c0c930691111c63e863
    Allowing file volumes on cluster.
    Allowing block volumes on cluster.
    Creating node 192.168.186.10 ... ID: 7946b917b91a579c619ba51d9129aeb0
        Adding device /dev/sdb ... OK
    Creating node 192.168.186.11 ... ID: 5d10e593e89c7c61f8712964387f959c
        Adding device /dev/sdb ... OK
    Creating node 192.168.186.12 ... ID: de620cb2c313a5461d5e0a6ae234c553
        Adding device /dev/sdb ... OK
```

Inside any GlusterFS pod, `gluster peer status` shows that the other nodes have all been added to the trusted storage pool (TSP).
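To check this quickly, a small awk filter can summarize the output. It assumes the usual `gluster peer status` layout, a `Number of Peers: N` line followed by one `State: Peer in Cluster (Connected)` line per healthy peer; the peer list excludes the local node, so a healthy three-node pool should report `2/2`. `peer_summary` is a hypothetical helper.

```bash
# Read `gluster peer status` output on stdin and print CONNECTED/TOTAL.
peer_summary() {
  awk '
    /^Number of Peers:/ { total = $4 }
    /^State: Peer in Cluster \(Connected\)/ { connected++ }
    END { printf "%d/%d\n", connected, total }
  '
}
```

Usage: `kubectl exec <glusterfs-pod> -- gluster peer status | peer_summary`.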

On every node running a GlusterFS pod, a VG has been created automatically from the raw disk device listed in topology-sample.json.

Each disk device becomes one VG, and every PVC created later is an LV carved out of that VG.
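The naming is predictable, as the Heketi log in the next section shows: a brick path such as `/var/lib/heketi/mounts/vg_<id>/brick_<id>/brick` sits on LV `brick_<id>` in VG `vg_<id>`. A sketch that maps a brick path to the `VG/LV` form accepted by LVM tools; `brick_to_lv` is hypothetical and assumes heketi's default mount root `/var/lib/heketi/mounts`.

```bash
# Map a heketi brick path to "VG/LV".
# Usage: brick_to_lv /var/lib/heketi/mounts/vg_<id>/brick_<id>[/brick]
brick_to_lv() {
  local out
  out=$(printf '%s\n' "$1" |
    sed -n 's|^/var/lib/heketi/mounts/\(vg_[0-9a-f][0-9a-f]*\)/\(brick_[0-9a-f][0-9a-f]*\)\(/brick\)\{0,1\}$|\1/\2|p')
  # Anything that does not look like a heketi brick path is an error.
  [ -n "$out" ] || return 1
  printf '%s\n' "$out"
}
```

Inside a GlusterFS pod, `lvs "$(brick_to_lv <brick-path>)"` then shows the LV that backs a given brick.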

`heketi-cli topology info` shows the topology, including each disk device's ID, the ID of the corresponding VG, and its total, used and free space.

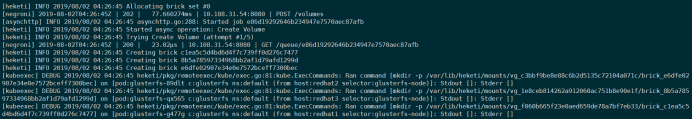

How Heketi creates the DB volume

Run `heketi-cli setup-openshift-heketi-storage` and watch what Heketi does in the background through its log:

```text
[negroni] 2020-03-06T16:45:13Z | 200 | 61.841µs | 192.168.186.10:30080 | GET /clusters
[negroni] 2020-03-06T16:45:13Z | 200 | 159.901µs | 192.168.186.10:30080 | GET /clusters/6749ed08e37290fbb1cc4c881872054d
[heketi] INFO 2020/03/06 16:45:13 Allocating brick set #0
[negroni] 2020-03-06T16:45:13Z | 202 | 46.293298ms | 192.168.186.10:30080 | POST /volumes
[asynchttp] INFO 2020/03/06 16:45:13 asynchttp.go:288: Started job 5ffdc4ab574897e19511ae43afa7e78c
[heketi] INFO 2020/03/06 16:45:13 Started async operation: Create Volume
[heketi] INFO 2020/03/06 16:45:13 Trying Create Volume (attempt #1/5)
[heketi] INFO 2020/03/06 16:45:13 Creating brick b6411ccff63daf1270bc9f354ca484dd
[heketi] INFO 2020/03/06 16:45:13 Creating brick 98700f7b0bce70eb29279fb275763704
[heketi] INFO 2020/03/06 16:45:13 Creating brick 777c447835963ef4db7cbb2392c85e59
[negroni] 2020-03-06T16:45:13Z | 200 | 41.375µs | 192.168.186.10:30080 | GET /queue/5ffdc4ab574897e19511ae43afa7e78c
[kubeexec] DEBUG 2020/03/06 16:45:13 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [mkdir -p /var/lib/heketi/mounts/vg_ccc135aa56ab4f89868d9755bd531a22/brick_b6411ccff63daf1270bc9f354ca484dd] on [pod:glusterfs-77ghn c:glusterfs ns:glusterfs (from host:ceph2 selector:glusterfs-node)]: Stdout []: Stderr []
[kubeexec] DEBUG 2020/03/06 16:45:13 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [mkdir -p /var/lib/heketi/mounts/vg_63f644b972ff7a04259395f67c149cf2/brick_777c447835963ef4db7cbb2392c85e59] on [pod:glusterfs-mhsgb c:glusterfs ns:glusterfs (from host:ceph3 selector:glusterfs-node)]: Stdout []: Stderr []
[kubeexec] DEBUG 2020/03/06 16:45:13 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [mkdir -p /var/lib/heketi/mounts/vg_6199228451001048c7543f41ce6572cb/brick_98700f7b0bce70eb29279fb275763704] on [pod:glusterfs-fflbn c:glusterfs ns:glusterfs (from host:ceph1 selector:glusterfs-node)]: Stdout []: Stderr []
[kubeexec] DEBUG 2020/03/06 16:45:13 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [lvcreate -qq --autobackup=n --poolmetadatasize 12288K --chunksize 256K --size 2097152K --thin vg_ccc135aa56ab4f89868d9755bd531a22/tp_76e0f4c09fbd75bcd2bfae166fb8a73d --virtualsize 2097152K --name brick_b6411ccff63daf1270bc9f354ca484dd] on [pod:glusterfs-77ghn c:glusterfs ns:glusterfs (from host:ceph2 selector:glusterfs-node)]: Stdout []: Stderr [ WARNING: This metadata update is NOT backed up. ]
[kubeexec] DEBUG 2020/03/06 16:45:14 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [lvcreate -qq --autobackup=n --poolmetadatasize 12288K --chunksize 256K --size 2097152K --thin vg_63f644b972ff7a04259395f67c149cf2/tp_777c447835963ef4db7cbb2392c85e59 --virtualsize 2097152K --name brick_777c447835963ef4db7cbb2392c85e59] on [pod:glusterfs-mhsgb c:glusterfs ns:glusterfs (from host:ceph3 selector:glusterfs-node)]: Stdout []: Stderr [ WARNING: This metadata update is NOT backed up. ]
[kubeexec] DEBUG 2020/03/06 16:45:14 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [lvcreate -qq --autobackup=n --poolmetadatasize 12288K --chunksize 256K --size 2097152K --thin vg_6199228451001048c7543f41ce6572cb/tp_98700f7b0bce70eb29279fb275763704 --virtualsize 2097152K --name brick_98700f7b0bce70eb29279fb275763704] on [pod:glusterfs-fflbn c:glusterfs ns:glusterfs (from host:ceph1 selector:glusterfs-node)]: Stdout []: Stderr [ WARNING: This metadata update is NOT backed up. ]
[kubeexec] DEBUG 2020/03/06 16:45:14 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [mkfs.xfs -i size=512 -n size=8192 /dev/mapper/vg_ccc135aa56ab4f89868d9755bd531a22-brick_b6411ccff63daf1270bc9f354ca484dd] on [pod:glusterfs-77ghn c:glusterfs ns:glusterfs (from host:ceph2 selector:glusterfs-node)]: Stdout [meta-data=/dev/mapper/vg_ccc135aa56ab4f89868d9755bd531a22-brick_b6411ccff63daf1270bc9f354ca484dd isize=512 agcount=8, agsize=65536 blks = sectsz=512 attr=2, projid32bit=1 = crc=1 finobt=0, sparse=0 data = bsize=4096 blocks=524288, imaxpct=25 = sunit=64 swidth=64 blks naming =version 2 bsize=8192 ascii-ci=0 ftype=1 log =internal log bsize=4096 blocks=2560, version=2 = sectsz=512 sunit=64 blks, lazy-count=1 realtime =none extsz=4096 blocks=0, rtextents=0 ]: Stderr []
[kubeexec] DEBUG 2020/03/06 16:45:14 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [awk "BEGIN {print \"/dev/mapper/vg_ccc135aa56ab4f89868d9755bd531a22-brick_b6411ccff63daf1270bc9f354ca484dd /var/lib/heketi/mounts/vg_ccc135aa56ab4f89868d9755bd531a22/brick_b6411ccff63daf1270bc9f354ca484dd xfs rw,inode64,noatime,nouuid 1 2\" >> \"/var/lib/heketi/fstab\"}"] on [pod:glusterfs-77ghn c:glusterfs ns:glusterfs (from host:ceph2 selector:glusterfs-node)]: Stdout []: Stderr []
[kubeexec] DEBUG 2020/03/06 16:45:14 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [mkfs.xfs -i size=512 -n size=8192 /dev/mapper/vg_63f644b972ff7a04259395f67c149cf2-brick_777c447835963ef4db7cbb2392c85e59] on [pod:glusterfs-mhsgb c:glusterfs ns:glusterfs (from host:ceph3 selector:glusterfs-node)]: Stdout [meta-data=/dev/mapper/vg_63f644b972ff7a04259395f67c149cf2-brick_777c447835963ef4db7cbb2392c85e59 isize=512 agcount=8, agsize=65536 blks = sectsz=512 attr=2, projid32bit=1 = crc=1 finobt=0, sparse=0 data = bsize=4096 blocks=524288, imaxpct=25 = sunit=64 swidth=64 blks naming =version 2 bsize=8192 ascii-ci=0 ftype=1 log =internal log bsize=4096 blocks=2560, version=2 = sectsz=512 sunit=64 blks, lazy-count=1 realtime =none extsz=4096 blocks=0, rtextents=0 ]: Stderr []
[negroni] 2020-03-06T16:45:14Z | 200 | 34.302µs | 192.168.186.10:30080 | GET /queue/5ffdc4ab574897e19511ae43afa7e78c
[kubeexec] DEBUG 2020/03/06 16:45:14 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [awk "BEGIN {print \"/dev/mapper/vg_63f644b972ff7a04259395f67c149cf2-brick_777c447835963ef4db7cbb2392c85e59 /var/lib/heketi/mounts/vg_63f644b972ff7a04259395f67c149cf2/brick_777c447835963ef4db7cbb2392c85e59 xfs rw,inode64,noatime,nouuid 1 2\" >> \"/var/lib/heketi/fstab\"}"] on [pod:glusterfs-mhsgb c:glusterfs ns:glusterfs (from host:ceph3 selector:glusterfs-node)]: Stdout []: Stderr []
[kubeexec] DEBUG 2020/03/06 16:45:14 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [mkfs.xfs -i size=512 -n size=8192 /dev/mapper/vg_6199228451001048c7543f41ce6572cb-brick_98700f7b0bce70eb29279fb275763704] on [pod:glusterfs-fflbn c:glusterfs ns:glusterfs (from host:ceph1 selector:glusterfs-node)]: Stdout [meta-data=/dev/mapper/vg_6199228451001048c7543f41ce6572cb-brick_98700f7b0bce70eb29279fb275763704 isize=512 agcount=8, agsize=65536 blks = sectsz=512 attr=2, projid32bit=1 = crc=1 finobt=0, sparse=0 data = bsize=4096 blocks=524288, imaxpct=25 = sunit=64 swidth=64 blks naming =version 2 bsize=8192 ascii-ci=0 ftype=1 log =internal log bsize=4096 blocks=2560, version=2 = sectsz=512 sunit=64 blks, lazy-count=1 realtime =none extsz=4096 blocks=0, rtextents=0 ]: Stderr []
[kubeexec] DEBUG 2020/03/06 16:45:14 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [awk "BEGIN {print \"/dev/mapper/vg_6199228451001048c7543f41ce6572cb-brick_98700f7b0bce70eb29279fb275763704 /var/lib/heketi/mounts/vg_6199228451001048c7543f41ce6572cb/brick_98700f7b0bce70eb29279fb275763704 xfs rw,inode64,noatime,nouuid 1 2\" >> \"/var/lib/heketi/fstab\"}"] on [pod:glusterfs-fflbn c:glusterfs ns:glusterfs (from host:ceph1 selector:glusterfs-node)]: Stdout []: Stderr []
[kubeexec] DEBUG 2020/03/06 16:45:14 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [mount -o rw,inode64,noatime,nouuid /dev/mapper/vg_ccc135aa56ab4f89868d9755bd531a22-brick_b6411ccff63daf1270bc9f354ca484dd /var/lib/heketi/mounts/vg_ccc135aa56ab4f89868d9755bd531a22/brick_b6411ccff63daf1270bc9f354ca484dd] on [pod:glusterfs-77ghn c:glusterfs ns:glusterfs (from host:ceph2 selector:glusterfs-node)]: Stdout []: Stderr []
[kubeexec] DEBUG 2020/03/06 16:45:14 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [mount -o rw,inode64,noatime,nouuid /dev/mapper/vg_63f644b972ff7a04259395f67c149cf2-brick_777c447835963ef4db7cbb2392c85e59 /var/lib/heketi/mounts/vg_63f644b972ff7a04259395f67c149cf2/brick_777c447835963ef4db7cbb2392c85e59] on [pod:glusterfs-mhsgb c:glusterfs ns:glusterfs (from host:ceph3 selector:glusterfs-node)]: Stdout []: Stderr []
[kubeexec] DEBUG 2020/03/06 16:45:14 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [mkdir /var/lib/heketi/mounts/vg_ccc135aa56ab4f89868d9755bd531a22/brick_b6411ccff63daf1270bc9f354ca484dd/brick] on [pod:glusterfs-77ghn c:glusterfs ns:glusterfs (from host:ceph2 selector:glusterfs-node)]: Stdout []: Stderr []
[kubeexec] DEBUG 2020/03/06 16:45:14 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [mkdir /var/lib/heketi/mounts/vg_63f644b972ff7a04259395f67c149cf2/brick_777c447835963ef4db7cbb2392c85e59/brick] on [pod:glusterfs-mhsgb c:glusterfs ns:glusterfs (from host:ceph3 selector:glusterfs-node)]: Stdout []: Stderr []
[kubeexec] DEBUG 2020/03/06 16:45:14 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [mount -o rw,inode64,noatime,nouuid /dev/mapper/vg_6199228451001048c7543f41ce6572cb-brick_98700f7b0bce70eb29279fb275763704 /var/lib/heketi/mounts/vg_6199228451001048c7543f41ce6572cb/brick_98700f7b0bce70eb29279fb275763704] on [pod:glusterfs-fflbn c:glusterfs ns:glusterfs (from host:ceph1 selector:glusterfs-node)]: Stdout []: Stderr []
[kubeexec] DEBUG 2020/03/06 16:45:14 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [mkdir /var/lib/heketi/mounts/vg_6199228451001048c7543f41ce6572cb/brick_98700f7b0bce70eb29279fb275763704/brick] on [pod:glusterfs-fflbn c:glusterfs ns:glusterfs (from host:ceph1 selector:glusterfs-node)]: Stdout []: Stderr []
[cmdexec] INFO 2020/03/06 16:45:14 Creating volume heketidbstorage replica 3
[negroni] 2020-03-06T16:45:15Z | 200 | 42.505µs | 192.168.186.10:30080 | GET /queue/5ffdc4ab574897e19511ae43afa7e78c
[kubeexec] DEBUG 2020/03/06 16:45:15 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [gluster --mode=script --timeout=600 volume create heketidbstorage replica 3 192.168.186.11:/var/lib/heketi/mounts/vg_ccc135aa56ab4f89868d9755bd531a22/brick_b6411ccff63daf1270bc9f354ca484dd/brick 192.168.186.10:/var/lib/heketi/mounts/vg_6199228451001048c7543f41ce6572cb/brick_98700f7b0bce70eb29279fb275763704/brick 192.168.186.12:/var/lib/heketi/mounts/vg_63f644b972ff7a04259395f67c149cf2/brick_777c447835963ef4db7cbb2392c85e59/brick] on [pod:glusterfs-77ghn c:glusterfs ns:glusterfs (from host:ceph2 selector:glusterfs-node)]: Stdout [volume create: heketidbstorage: success: please start the volume to access data ]: Stderr []
[kubeexec] DEBUG 2020/03/06 16:45:15 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [gluster --mode=script --timeout=600 volume set heketidbstorage user.heketi.id 17a63c6483a00155fb0b48bb353b9c7a] on [pod:glusterfs-77ghn c:glusterfs ns:glusterfs (from host:ceph2 selector:glusterfs-node)]: Stdout [volume set: success ]: Stderr []
[negroni] 2020-03-06T16:45:16Z | 200 | 33.664µs | 192.168.186.10:30080 | GET /queue/5ffdc4ab574897e19511ae43afa7e78c
[negroni] 2020-03-06T16:45:17Z | 200 | 30.885µs | 192.168.186.10:30080 | GET /queue/5ffdc4ab574897e19511ae43afa7e78c
[negroni] 2020-03-06T16:45:18Z | 200 | 101.832µs | 192.168.186.10:30080 | GET /queue/5ffdc4ab574897e19511ae43afa7e78c
[kubeexec] DEBUG 2020/03/06 16:45:19 heketi/pkg/remoteexec/kube/exec.go:81:kube.ExecCommands: Ran command [gluster --mode=script --timeout=600 volume start heketidbstorage] on [pod:glusterfs-77ghn c:glusterfs ns:glusterfs (from host:ceph2 selector:glusterfs-node)]: Stdout [volume start: heketidbstorage: success ]: Stderr []
[asynchttp] INFO 2020/03/06 16:45:19 asynchttp.go:292: Completed job 5ffdc4ab574897e19511ae43afa7e78c in 5.802125511s
[negroni] 2020-03-06T16:45:19Z | 303 | 42.089µs | 192.168.186.10:30080 | GET /queue/5ffdc4ab574897e19511ae43afa7e78c
[negroni] 2020-03-06T16:45:19Z | 200 | 50.078742ms | 192.168.186.10:30080 | GET /volumes/17a63c6483a00155fb0b48bb353b9c7a
[negroni] 2020-03-06T16:45:19Z | 200 | 484.808µs | 192.168.186.10:30080 | GET /backup/db
```
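Most of this log is HTTP polling noise. A sed filter that keeps only the commands Heketi ran, prefixed by the pod that ran them, makes the sequence (mkdir, lvcreate, mkfs.xfs, fstab entry, mount, brick directory, gluster volume create/set/start) easier to follow. A sketch: `heketi_cmds` is hypothetical, and it relies on the `Ran command [...] on [pod:...` format seen above.

```bash
# Read heketi log lines on stdin; print "POD: COMMAND" for each executed command.
heketi_cmds() {
  sed -n 's/.*Ran command \[\([^]]*\)] on \[pod:\([^ ]*\) .*/\2: \1/p'
}
```

Usage: `kubectl logs <heketi-pod> | heketi_cmds`.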

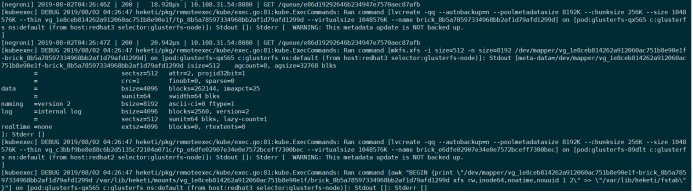

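How Kubernetes creates a PVC through Heketi

The StorageClass specifies the Heketi server address and the volume type (replica 3). When a user creates a 1G PV through a PVC, here is what the Heketi service does in the background: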

First, after receiving the request, Heketi starts a job, creates 3 bricks, and creates the corresponding directories on the three GFS nodes, as shown below:

Create the LVs and add them to the automatic mounts:

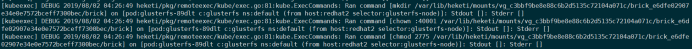

Create the bricks and set their permissions:

Create the volume:

References

heketi: https://github.com/heketi/heketi

glusterfs: https://github.com/gluster/gluster-kubernetes